1. S.Y. Chen, Jianwei Zhang, Houxiang Zhang, N.M. Kwok, Y.F. Li, "Intelligent Lighting Control for Vision-Based Robotic Manipulation", IEEE Transactions on Industrial Electronics, Vol. 59, No. 8, 2012, pp. 3254-3263. [SCI: 922KL (IF=5.1/5.5, TOP1)] (HTML, DOI) [Full paper: intelligent lighting control for vision-based robot systems]

Abstract—The ability of a robot vision system to capture informative images is greatly affected by the lighting conditions in the scene. This work reveals the importance of active lighting control for robotic manipulation and proposes novel strategies for good visual interpretation of objects in the workspace. Good illumination helps to obtain images with a high signal-to-noise ratio, a wide range of linearity, high contrast, and true color rendering of the object’s natural properties. It should also avoid highlights and extreme intensity imbalance. If only passive illumination is used, the robot often obtains poor images from which no appropriate algorithm can extract useful information. A fuzzy controller is further developed to maintain a lighting level suitable for robotic manipulation and guidance in dynamic environments. Both numerical simulations and practical experiments carried out in this research show satisfactory results for the proposed active lighting control.
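
The abstract does not give the controller's rule base. Purely as an illustration, the sketch below assumes the controller reads the mean image brightness (normalized to [0, 1]), compares it with a target level, and outputs a lamp adjustment through five triangular fuzzy sets with weighted-average (zero-order Sugeno) defuzzification. The set breakpoints, output values, and the linear lamp-to-brightness plant in `simulate` are hypothetical choices, not the authors' design.

```python
# Hypothetical fuzzy lighting controller: error = target - mean brightness (both in [0, 1]).

def tri(x, a, b, c):
    """Triangular membership: 0 outside (a, c), peak 1 at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)

# (fuzzy set name, (a, b, c) breakpoints on the error axis, output lamp change)
RULES = [
    ("too bright, large", (-1.5, -1.0, -0.5), -0.3),
    ("too bright, small", (-1.0, -0.5, 0.0), -0.1),
    ("about right",       (-0.5, 0.0, 0.5),  0.0),
    ("too dark, small",   (0.0, 0.5, 1.0),   0.1),
    ("too dark, large",   (0.5, 1.0, 1.5),   0.3),
]

def fuzzy_delta(error):
    """Lamp adjustment for a brightness error, by weighted-average defuzzification."""
    e = max(-1.0, min(1.0, error))  # clamp so the outer sets act as shoulders
    weights = [(tri(e, *abc), out) for _, abc, out in RULES]
    total = sum(w for w, _ in weights)
    return sum(w * out for w, out in weights) / total if total > 0 else 0.0

def simulate(target=0.5, ambient=0.1, gain=0.6, steps=100):
    """Closed loop with a toy plant: brightness = gain * lamp + ambient, clipped to [0, 1]."""
    clip = lambda v: max(0.0, min(1.0, v))
    lamp = 0.0
    for _ in range(steps):
        brightness = clip(gain * lamp + ambient)
        lamp = clip(lamp + fuzzy_delta(target - brightness))
    return clip(gain * lamp + ambient)
```

Small corrections near the setpoint and larger ones far from it give a nonlinear gain, which is the usual reason to prefer a fuzzy rule base over a single proportional gain; `simulate(ambient=0.3)` mimics a change in ambient light.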

2. S.Y. Chen, "Kalman Filter for Robot Vision: a Survey", IEEE Transactions on Industrial Electronics, Vol. 59, No. 11, 2012, pp. 4409-4420. [SCI: 965EC (IF=5.1/5.5, TOP1)] (HTML, DOI) [Full paper: Kalman filters and robot vision]

Abstract—Kalman filters have received much attention with the increasing demands for robotic automation. This paper briefly surveys their recent developments for robot vision. Among the many factors that affect the performance of a robotic system, Kalman filters have made great contributions to vision perception. Kalman filters address uncertainties in robot localization, navigation, following, tracking, motion control, estimation and prediction, visual servoing and manipulation, as well as structure reconstruction from a sequence of images. By the 50th anniversary of the Kalman filter, more than 20 kinds of Kalman filters had been developed, including extended Kalman filters and unscented Kalman filters. In the last 30 years, about 800 publications have reported the capability of these filters in solving robot vision problems. Such problems span a rather wide application area, including object modeling, robot control, target tracking, surveillance, search, recognition, and assembly, as well as robotic manipulation, localization, mapping, navigation, and exploration. These reports are summarized in this review to enable easy referral to suitable methods for practical solutions. Representative contributions and future research trends are also addressed at an abstract level.

Kalman filters covered include: (1) Kalman filter; (2) Self-tuning Kalman filter; (3) Steady-state Kalman filter; (4) Ensemble Kalman filter (EnKF); (5) Adaptive Kalman filter (AKF); (6) Switching Kalman filter (SKF); (7) Fuzzy Kalman filter (FKF); (8) Extended Kalman filter (EKF); (9) Motor EKF (MEKF); (10) Hybrid EKF (HEKF); (11) Augmented state EKF; (12) Modified covariance EKF (MV-EKF); (13) Iterative adaptive EKF (IA-EKF); (14) Unscented Kalman filter (UKF); (15) Dual UKF (DUKF); (16) Recursive UKF; (17) Square root UKF (SR-UKF); (18) Kinematic Kalman filter (KKF); (19) Multidimensional kinematic Kalman filter (MD-KKF); (20) Fuzzy logic controller Kalman filter (FLC-KF); (21) Neural network aided EKF (NN-EKF); and (22) Particle swarm optimization aided EKF (PSO-EKF).
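
All of the variants listed above build on the same predict/update cycle. A minimal sketch of that cycle for a scalar state, assuming a random-walk motion model and Gaussian noise with known variances `q` (process) and `r` (measurement); the class and field names are illustrative, not taken from the survey:

```python
from dataclasses import dataclass

@dataclass
class ScalarKalman:
    x: float  # state estimate
    p: float  # variance of the estimate
    q: float  # process noise variance (random-walk model x_k = x_{k-1} + w)
    r: float  # measurement noise variance (z_k = x_k + v)

    def predict(self) -> None:
        # The mean is unchanged under a random walk; uncertainty grows by q.
        self.p += self.q

    def update(self, z: float) -> float:
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)      # correct the estimate with the innovation
        self.p *= 1.0 - k               # posterior variance
        return self.x

kf = ScalarKalman(x=0.0, p=10.0, q=1e-4, r=0.25)
for z in [1.1, 0.9, 1.05, 0.97, 1.02]:  # hypothetical noisy position readings
    kf.predict()
    estimate = kf.update(z)
```

The EKF and UKF families replace the linear model with linearized or sigma-point propagation, but keep this gain-weighted correction step.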

3. S.Y. Chen, G.J. Luo, Xiaoli Li, S.M. Ji, B.W. Zhang, "The Specular Exponent as a Criterion for Appearance Quality Assessment of Pearl-Like Objects by Artificial Vision", IEEE Transactions on Industrial Electronics, Vol. 59, No. 8, 2012, pp. 3264-3272. [SCI: 922KL (IF=5.1/5.5, TOP1)] (HTML, DOI) [Full paper: appearance quality assessment of smooth objects based on artificial vision and the specular exponent]

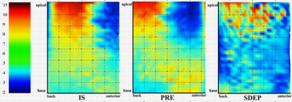

Abstract—For pearls and similar smooth, lustrous jewels, apparent shininess is one of the most important factors of beauty. This paper proposes an approach to the automatic assessment of spherical surface quality, in terms of shininess and smoothness, using artificial vision. It traces a light ray emitted by a point source and images the resulting highlight patterns reflected from the surface. Once the reflected ray is observed at the white-clipping level in the camera image, the direction of the incident ray is determined and the specularity is estimated. As the specular exponent is the most important determinant of surface shininess, the proposed method can efficiently determine an equivalent index of appearance for quality assessment. The observed highlight spot and the specular exponent measurement described in this paper provide a way to measure shininess and to relate surface appearance to white-clipped image highlights. This is very useful in industrial applications for the automatic classification of spherical objects. Both numerical simulations and practical experiments are carried out. Results of objective and subjective comparisons show satisfactory consistency with expert visual inspection. They also demonstrate the method's feasibility in practical industrial systems.
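
To see why the size of the white-clipped spot encodes the specular exponent, assume a Phong-style lobe I(α) = I_peak · cos^n(α), where α is the angle from the mirror direction (an assumption; the paper's exact reflection model may differ). The spot edge is where the lobe falls to the clipping level, so n = ln(clip / I_peak) / ln(cos α_edge): a shinier surface (larger n) gives a smaller spot. The sketch assumes the unclipped peak intensity is known, for example from calibration, since the camera cannot observe it directly.

```python
import math

def phong_specular(alpha, n, peak):
    """Specular intensity at angular offset alpha (radians) from the mirror direction."""
    return peak * max(0.0, math.cos(alpha)) ** n

def exponent_from_highlight(alpha_edge, peak, clip):
    """Solve peak * cos(alpha_edge) ** n == clip for the specular exponent n.

    alpha_edge: angular radius of the white-clipped highlight spot (0 < alpha_edge < pi/2)
    peak:       unclipped peak intensity (assumed known)
    clip:       camera white-clipping level (0 < clip < peak)
    """
    if not (0.0 < clip < peak) or not (0.0 < alpha_edge < math.pi / 2):
        raise ValueError("need 0 < clip < peak and 0 < alpha_edge < pi/2")
    return math.log(clip / peak) / math.log(math.cos(alpha_edge))

# Round trip: the spot edge of a hypothetical n = 50 surface recovers n.
edge = math.acos((1.0 / 2.0) ** (1.0 / 50.0))
n_est = exponent_from_highlight(edge, peak=2.0, clip=1.0)
```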

4. S.Y. Chen and Z.J. Wang, "Acceleration Strategies in Generalized Belief Propagation", IEEE Transactions on Industrial Informatics, Vol. 8, No. 1, 2012, pp. 41-48. [SCI:881XZ (IF=3.0, Q1)] (HTML, DOI, SourceCode) [Full paper: accelerated algorithms for generalized belief propagation (about 200 times faster than the original)] [Source code and test data download]

Abstract—Generalized belief propagation is a popular algorithm for performing inference on large-scale Markov random field (MRF) networks. This paper proposes accelerated generalized belief propagation (AGBP), which uses three strategies to reduce the computational effort. First, a min-sum messaging scheme and a caching technique are used to improve the accessibility. Second, a direction set method is used to reduce the complexity of computing clique messages from quartic to cubic. Finally, a coarse-to-fine hierarchical state-space reduction method is presented to decrease redundant states. The results show that a combination of these strategies can greatly accelerate the inference process in large-scale MRFs. For common stereo matching, it yields a speed-up of about 200 times.
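
The min-sum scheme replaces the products of sum-product BP with sums of costs minimized over labels. As a minimal sketch only, the code below applies min-sum message passing to the simplest case, a chain-structured pairwise MRF, where it is exact and reduces to dynamic programming. It is ordinary pairwise BP, not the paper's region-based AGBP, and the truncated-linear smoothness cost is an assumed example.

```python
from itertools import product

def truncated_linear(a, b, lam=1.0, tau=2):
    """Smoothness cost between neighbouring labels (e.g. disparities)."""
    return lam * min(abs(a - b), tau)

def chain_energy(labels, unary, pairwise):
    e = sum(unary[i][l] for i, l in enumerate(labels))
    return e + sum(pairwise(a, b) for a, b in zip(labels, labels[1:]))

def min_sum_chain(unary, pairwise):
    """Exact MAP labelling of a chain MRF by forward min-sum messages plus backtracking."""
    n, k = len(unary), len(unary[0])
    msg = [[0.0] * k]  # msg[i][l]: best cost of nodes 0..i-1 if node i takes label l
    back = []          # back[i-1][l]: best label of node i-1 given node i has label l
    for i in range(1, n):
        cur, arg = [], []
        for l in range(k):
            cost, best_prev = min(
                (msg[i - 1][p] + unary[i - 1][p] + pairwise(p, l), p) for p in range(k)
            )
            cur.append(cost)
            arg.append(best_prev)
        msg.append(cur)
        back.append(arg)
    labels = [min(range(k), key=lambda l: msg[n - 1][l] + unary[n - 1][l])]
    for i in range(n - 1, 0, -1):
        labels.append(back[i - 1][labels[-1]])
    return labels[::-1]

def brute_force_map(unary, pairwise):
    """Exhaustive check for tiny problems."""
    k = len(unary[0])
    return min(product(range(k), repeat=len(unary)),
               key=lambda ls: chain_energy(ls, unary, pairwise))
```

Each message costs O(k²) here; the paper's direction set and hierarchical strategies target exactly this kind of per-message cost on larger cliques.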

5. S.Y. Chen, J.H. Zhang, Y.F. Li, J.W. Zhang, "A Hierarchical Model Incorporating Segmented Regions and Pixel Descriptors for Video Background Subtraction", IEEE Transactions on Industrial Informatics, Vol. 8, No. 1, Feb. 2012, pp. 118-127. [SCI:881XZ (IF=3.0, Q1)] (HTML, DOI) [Full paper: achieves the highest efficiency among background subtraction algorithms, with the highest or near-highest accuracy on most videos]

Abstract—Background subtraction is important for detecting moving objects in videos. Many approaches to background subtraction currently exist. However, they usually neglect the fact that background images consist of different objects whose conditions may change frequently. In this paper, a novel Hierarchical Background Model (HBM) is proposed based on segmented background images. It first segments the background images into several regions by the mean-shift algorithm. Then a hierarchical model, which consists of region models and pixel models, is created. The region model is a kind of approximate Gaussian mixture model extracted from the histogram of a specific region. The pixel model is based on the co-occurrence of image variations described by histograms of oriented gradients of pixels in each region. Benefiting from the background segmentation, the region models and pixel models corresponding to different regions can be given different parameters. The pixel descriptors are calculated only from neighboring pixels belonging to the same object. Experiments on a video database, covering both static and dynamic scenes, compare the method with several well-known background subtraction methods and demonstrate its effectiveness. Its efficiency in detecting the foreground is the best among state-of-the-art methods, and its accuracy is nearly the best.
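
For orientation, the sketch below is the kind of per-pixel baseline such hierarchical models improve on: each pixel keeps a running Gaussian (mean and variance), and a pixel is flagged as foreground when it deviates by more than `k` standard deviations. It is not HBM (there are no mean-shift regions, region GMMs, or HOG descriptors), and all parameter values are assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class RunningGaussianBackground:
    """Per-pixel single-Gaussian background model for grayscale frames (lists of rows)."""
    alpha: float = 0.05     # learning rate for mean/variance updates
    k: float = 2.5          # foreground if |pixel - mean| > k * std
    init_var: float = 25.0  # variance assigned on the first frame
    min_var: float = 4.0    # floor so a perfectly static scene does not become hypersensitive
    mean: list = field(default_factory=list)
    var: list = field(default_factory=list)

    def apply(self, frame):
        """Return a boolean foreground mask and update the model with background pixels."""
        if not self.mean:  # the first frame initialises the model
            self.mean = [[float(v) for v in row] for row in frame]
            self.var = [[self.init_var] * len(row) for row in frame]
            return [[False] * len(row) for row in frame]
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, v in enumerate(row):
                d = v - self.mean[y][x]
                is_fg = d * d > self.k ** 2 * self.var[y][x]
                if not is_fg:  # only background pixels update the model
                    self.mean[y][x] += self.alpha * d
                    self.var[y][x] = max(self.min_var,
                                         (1 - self.alpha) * self.var[y][x] + self.alpha * d * d)
                mask_row.append(is_fg)
            mask.append(mask_row)
        return mask
```

Because each pixel is judged in isolation, waving foliage or lighting changes that affect a whole object produce false detections; segmenting the background into regions with their own parameters, as HBM does, addresses exactly that weakness.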

6. S.Y. Chen, Q. Guan, "Parametric Shape Representation by a Deformable NURBS Model for Cardiac Functional Measurements", IEEE Transactions on Biomedical Engineering, Vol. 58, No. 3, Mar. 2011, pp. 480-487. [SCI: 725QW (IF=2.5); ESI] (HTML, DOI) [Full paper: parametric shape representation based on a deformable NURBS model and its application to cardiac functional analysis]

Abstract—This paper proposes a method of parametric representation and functional measurement of three-dimensional cardiac shapes in a deformable NURBS model. The representation makes it very easy to automatically evaluate the functional parameters and myocardial kinetics of the heart, since quantitative analysis can follow in a simple way. In the model, local deformation and motion of the cardiac shape are expressed by adjustable parameters. In particular, an effective integral algorithm is used for volumetric measurement of a NURBS shape, since volume is the most basic parameter in cardiac functional analysis. Compared with traditional methods, this makes the numerical computation convenient, efficient, and accurate. Practical experiments are carried out, and the results show that the algorithm achieves satisfactory measurement accuracy and efficiency. The parametric NURBS model in cylindrical coordinates is not only well suited to fitting the anatomical surfaces of a cardiac shape, but also convenient for geometric transformation and nonrigid registration, and able to represent local dynamics and kinetics; it can therefore easily be applied to quantitative and functional analysis of the heart.
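
In cylindrical coordinates, the volume enclosed by a surface r = r(θ, z) that every ray from the z axis meets once is V = ∫∫ ½ r(θ, z)² dθ dz. The sketch below evaluates that integral with the midpoint rule for any callable radius. The callable stands in for a NURBS surface evaluation (an assumption), and plain quadrature is not necessarily the paper's integral algorithm.

```python
import math
from typing import Callable

def volume_cylindrical(radius: Callable[[float, float], float],
                       z0: float, z1: float,
                       n_theta: int = 256, n_z: int = 256) -> float:
    """V = integral over z in [z0, z1] and theta in [0, 2*pi) of 0.5 * r(theta, z)**2.

    Uses the midpoint rule on an n_theta x n_z grid. Assumes the surface is star-shaped
    about the z axis, as a radius-in-cylindrical-coordinates model implies.
    """
    dtheta = 2.0 * math.pi / n_theta
    dz = (z1 - z0) / n_z
    total = 0.0
    for j in range(n_z):
        z = z0 + (j + 0.5) * dz
        for i in range(n_theta):
            theta = (i + 0.5) * dtheta
            total += 0.5 * radius(theta, z) ** 2
    return total * dtheta * dz

# Hypothetical shape: a slightly lobed cross-section that tapers along z.
v = volume_cylindrical(lambda th, z: (1.0 + 0.1 * math.cos(2 * th)) * (1.0 - 0.5 * z), 0.0, 1.0)
```

Tracking this volume over the cardiac cycle gives quantities such as end-diastolic and end-systolic volume, which is why the paper treats volume as the basic functional parameter.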

7. S.Y. Chen and Y.F. Li, "Determination of Stripe Edge Blurring for Depth Sensing", IEEE Sensors Journal, Vol. 11, No. 2, Feb. 2011, pp. 389-390. [SCI:681ZS, Journal Best Paper Award] (HTML, DOI) [A method for computing the degree of edge blurring and its use in depth sensing]

Abstract—Estimation of the blurring effect is very important for many imaging systems. This letter reports an idea for computing the blurring parameter on certain stripe edges efficiently and robustly. Two formulas are found that determine the degree of imaging blur solely by calculating the areas under the corresponding profile curves, without deconvolution or transformation of the image. The method can be used in many applications, such as vision sensing of scene depth. A 3D vision system is taken as an implementation example.
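
One area relation of this kind, under assumptions that may not match the letter's two formulas: if an ideal step edge of height h at position x0 is blurred by a Gaussian point spread function with standard deviation σ, the area between the blurred profile and the ideal step is h·σ·√(2/π), so σ follows from a single area measurement without deconvolution. The sketch assumes x0 and h are known (in practice they would come from the profile's plateaus and midpoint).

```python
import math

def blurred_step(x, x0, sigma, height=1.0):
    """Ideal step of the given height at x0, convolved with a Gaussian PSF of std sigma."""
    return height * 0.5 * (1.0 + math.erf((x - x0) / (sigma * math.sqrt(2.0))))

def sigma_from_area(xs, profile, x0, height=1.0):
    """Estimate sigma from the area between a sampled edge profile and the ideal step.

    xs must be uniformly spaced. For a Gaussian-blurred step the area equals
    height * sigma * sqrt(2 / pi), hence sigma = area / height * sqrt(pi / 2).
    """
    dx = xs[1] - xs[0]
    area = sum(abs(v - (height if x >= x0 else 0.0)) for x, v in zip(xs, profile)) * dx
    return area / height * math.sqrt(math.pi / 2.0)

dx = 0.01
xs = [-20.0 + (i + 0.5) * dx for i in range(4000)]
profile = [blurred_step(x, 0.0, 2.0, height=80.0) for x in xs]
sigma_est = sigma_from_area(xs, profile, 0.0, height=80.0)
```

In a defocus-based depth sensor, σ grows with distance from the focal plane, so a calibrated σ-to-depth curve turns this estimate into a depth cue.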

8. S.Y. Chen, Y.F. Li, J.W. Zhang, "Vision Processing for Realtime 3D Data Acquisition Based on Coded Structured Light", IEEE Transactions on Image Processing, Vol. 17, No. 2, Feb. 2008, pp. 167-176. [SCI: 252OC (IF=3.3, Q1); ESI] (PDF, HTML, DOI) [Full paper: computer vision processing and real-time 3D data acquisition based on coded structured light]

Abstract—Structured light vision systems have been successfully used for accurate measurement of 3D surfaces in computer vision. However, their applications have so far been mainly limited to scanning stationary objects, since tens of images must be captured to recover one 3D scene. This paper presents an idea for real-time acquisition of 3D surface data by a specially coded vision system. To achieve 3D measurement of a dynamic scene, data acquisition must be performed with only a single image. A principle of uniquely color-encoded pattern projection is proposed to design a color matrix that improves reconstruction efficiency. The matrix is produced by a special code sequence and a number of state transitions. A color projector is controlled by a computer to generate the desired color patterns in the scene. Unique indexing of the light codes is crucial for color projection, since each light grid must be uniquely identified from its local neighborhood so that 3D reconstruction can be performed with only local analysis of a single image. A scheme is presented to describe such a vision processing method for fast 3D data acquisition. Practical experimental results are provided to analyze the efficiency of the proposed methods.
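
A standard way to obtain codes whose local neighborhoods are unique is a De Bruijn sequence: over k colors, every run of n consecutive stripes occurs exactly once, so a stripe can be decoded from its n-stripe neighborhood alone. The sketch below uses that textbook construction as a stand-in; the paper builds its color matrix from its own code sequence and state transitions, which may differ, and the palette is hypothetical.

```python
def de_bruijn(k, n):
    """Cyclic De Bruijn sequence B(k, n): every length-n word over range(k) appears once."""
    a = [0] * k * n
    sequence = []

    def db(t, p):
        if t > n:
            if n % p == 0:
                sequence.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return sequence

def stripe_pattern(k, n):
    """Unroll the cyclic sequence so all k**n windows appear in a linear stripe pattern."""
    seq = de_bruijn(k, n)
    return seq + seq[:n - 1]

def window_index(pattern, n):
    """Map each length-n window of colors to the position of its first stripe."""
    index = {}
    for i in range(len(pattern) - n + 1):
        window = tuple(pattern[i:i + n])
        if window in index:
            raise ValueError("pattern is not uniquely decodable")
        index[window] = i
    return index

COLORS = ["red", "green", "blue"]  # hypothetical palette
pattern = stripe_pattern(len(COLORS), 3)
lookup = window_index(pattern, 3)
```

A plain De Bruijn sequence may repeat a color in adjacent stripes, which makes stripe boundaries hard to segment; practical designs usually add constraints such as forbidding equal neighbors.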

9. S.Y. Chen and Y.F. Li, "Vision Sensor Planning for 3-D Model Acquisition", IEEE Transactions on Systems, Man and Cybernetics, Part B, Vol. 35, No. 5, Oct. 2005, pp. 894-904. [SCI: 971KZ (IF=3.0, Q1)] (PDF, HTML, DOI) [Full paper: 3D model reconstruction based on vision sensor planning]

Abstract—A novel method is proposed in this paper for automatic acquisition of three-dimensional models of unknown objects by an active vision system, in which the vision sensor is moved from one viewpoint to the next around the target to obtain its complete model. In each step, sensing parameters are determined automatically for incrementally building the 3D target model. The method is developed by analyzing the target’s trend surface, which is the regional feature of a surface that describes the global tendency of change. While previous approaches to trend analysis usually focus on generating polynomial equations for interpreting regression surfaces in three dimensions, this paper proposes a new mathematical model for predicting the unknown area of the object surface. A uniform surface model is established by analyzing the surface curvatures. Furthermore, a criterion is defined to determine the exploration direction, and an algorithm is developed for determining the parameters of the next view. The method is implemented to validate its effectiveness.
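
The abstract contrasts the paper's model with conventional trend analysis, which fits a low-order polynomial regression surface. For reference, the sketch below implements that conventional baseline: a least-squares quadratic trend surface z ≈ c0 + c1·x + c2·y + c3·x² + c4·xy + c5·y², evaluated outside the sampled region to extrapolate unseen surface. It is not the paper's new predictive model, and the helper names are hypothetical.

```python
def quad_features(x, y):
    return [1.0, x, y, x * x, x * y, y * y]

def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system a @ x = b."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for j in range(c, n + 1):
                m[r][j] -= f * m[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][j] * x[j] for j in range(r + 1, n))) / m[r][r]
    return x

def fit_trend_surface(points):
    """Least-squares quadratic trend surface through (x, y, z) samples (normal equations)."""
    ata = [[0.0] * 6 for _ in range(6)]
    atz = [0.0] * 6
    for x, y, z in points:
        f = quad_features(x, y)
        for i in range(6):
            atz[i] += f[i] * z
            for j in range(6):
                ata[i][j] += f[i] * f[j]
    return solve_linear(ata, atz)

def predict(coeffs, x, y):
    return sum(c * f for c, f in zip(coeffs, quad_features(x, y)))
```

Polynomial extrapolation like this degrades quickly away from the observed patch, which is the limitation that motivates a dedicated model for predicting the unknown area.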

10. S.Y. Chen and Y.F. Li, "Automatic Sensor Placement for Model-Based Robot Vision", IEEE Transactions on Systems, Man and Cybernetics, Part B, Vol. 34, No. 1, Feb. 2004, pp. 393-408. [SCI:767QR (IF=3.0, Q1)] (PDF, HTML, DOI) [Full paper: automatic model-based sensor placement in robot vision]

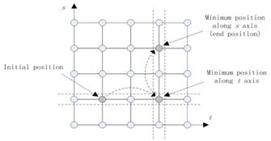

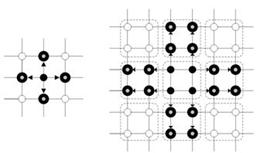

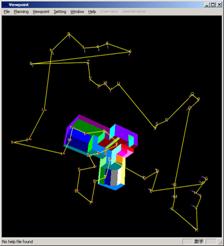

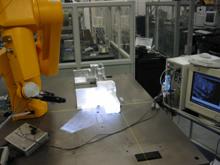

Abstract—This paper presents a method for automatic sensor placement for model-based robot vision. In such a vision system, the sensor often needs to be moved from one pose to another around the object to observe all features of interest. This allows multiple 3D images to be taken from different vantage points. The task involves determining the optimal sensor placements and a shortest path through these viewpoints. During sensor planning, object features are resampled as individual points with attached surface normals. The optimal sensor placement graph is obtained by a genetic algorithm that uses a min-max criterion for evaluation. The shortest path is approximated by the Christofides algorithm. A Viewpoint Planner is developed to generate the sensor placement plan. Its functions include 3D animation of the object geometry, sensor specification, initialization of the viewpoint number and distribution, viewpoint evolution, shortest path computation, scene simulation from a specific viewpoint, and parameter amendment. Experiments are also carried out on a real robot vision system to demonstrate the effectiveness of the proposed method.
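
Christofides' algorithm builds a minimum spanning tree (MST), adds a minimum-weight perfect matching on the tree's odd-degree vertices, and shortcuts an Euler tour, which guarantees a closed tour at most 1.5 times optimal in metric spaces. The matching step is lengthy to write out, so the sketch below shows the simpler double-tree variant that Christofides refines (MST plus preorder shortcutting, at most 2 times optimal) over hypothetical 3D viewpoint positions; adding the matching step would turn it into Christofides proper.

```python
import math

def mst_prim(points):
    """Prim's algorithm on the complete Euclidean graph. Returns (children lists, MST weight)."""
    n = len(points)
    in_tree = [False] * n
    dist = [math.inf] * n
    parent = [-1] * n
    children = [[] for _ in range(n)]
    dist[0], weight = 0.0, 0.0
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: dist[i])
        in_tree[u] = True
        weight += dist[u]
        if parent[u] >= 0:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < dist[v]:
                    dist[v], parent[v] = d, u
    return children, weight

def double_tree_tour(points):
    """Visit order from a preorder walk of the MST (a shortcut of the doubled-tree tour)."""
    children, _ = mst_prim(points)
    order, stack = [], [0]
    while stack:
        u = stack.pop()
        order.append(u)
        stack.extend(reversed(children[u]))
    return order

def tour_length(points, order):
    """Length of the closed tour that returns to the starting viewpoint."""
    return sum(math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
               for i in range(len(order)))

viewpoints = [(0, 0, 1), (1, 0, 1), (0, 1, 1), (-1, 0, 0.5), (0, -1, 0.5), (1, 1, 0), (-1, -1, 0)]
route = double_tree_tour(viewpoints)
```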

11. Y.F. Li and S.Y. Chen, "Automatic Recalibration of an Active Structured Light Vision System", IEEE Transactions on Robotics, Vol. 19, No. 2, April 2003, pp. 259-268. [SCI:663WF (IF=3.063, TOP-Q1)] (PDF, HTML, DOI) (No.1 in Robotics!) [Full paper: self-recalibration of an active structured light vision system]

Abstract—A structured light vision system using pattern projection is useful for robust reconstruction of 3D objects. One of the major tasks in using such a system is the calibration of the sensing system. This paper presents a new method by which a 2-DOF structured light system can be automatically recalibrated if and when the relative pose between the camera and the projector changes. A distinct advantage of this method is that it requires neither an accurately designed calibration device nor prior knowledge of the motion of the camera or the scene. Several important cues for self-recalibration, including geometrical, illumination, and focus cues, are explored. Sensitivity analysis shows that high accuracy in depth values can be achieved with this calibration method. Experimental results are presented to demonstrate the calibration technique.
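
Depth accuracy in such a system rests on triangulation between the camera and the projector, which is why a change in their relative pose calls for recalibration. As a generic illustration, not the paper's 2-DOF recalibration procedure, the sketch below triangulates depth in a plane from the baseline length and the two ray angles, and estimates numerically how sensitive the depth is to an error in the camera angle. The planar geometry and the function names are assumptions.

```python
import math

def triangulate_depth(baseline, alpha, beta):
    """Depth of a point seen at angle alpha from the camera and beta from the projector.

    Both angles are measured from the baseline joining the two optical centres (radians).
    By the law of sines, camera-to-point distance = baseline * sin(beta) / sin(alpha + beta),
    and the depth is that distance times sin(alpha).
    """
    return baseline * math.sin(alpha) * math.sin(beta) / math.sin(alpha + beta)

def depth_sensitivity(baseline, alpha, beta, h=1e-6):
    """Central-difference estimate of d(depth)/d(alpha): depth error per radian of angle error."""
    return (triangulate_depth(baseline, alpha + h, beta)
            - triangulate_depth(baseline, alpha - h, beta)) / (2 * h)
```

Analytically the sensitivity is baseline · sin²β / sin²(α + β), so it grows sharply as the two rays approach parallel (α + β near π), one reason why configurations with a reasonable triangulation angle give better depth accuracy.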

12. S. Wen, W. Zheng, J. Zhu, X. Li, S.Y. Chen*, "Elman Fuzzy Adaptive Control for Obstacle Avoidance of Mobile Robots using Hybrid Force/Position Incorporation", IEEE Transactions on Systems, Man, and Cybernetics, Part C, Vol. 42, No. 4, 2012, pp. 603-608. [SCI: 962XW (IF=2.1)] (HTML, DOI)

Abstract—This paper considers a virtual force field between mobile robots and obstacles that keeps them apart at a desired distance. An online learning method of hybrid force/position control is proposed for obstacle avoidance in a robot environment. An Elman neural network is proposed to compensate for the effect of uncertainties between the dynamic robot model and the obstacles. Moreover, this paper uses an Elman fuzzy adaptive controller to adjust the exact distance between the robot and the obstacles. The effectiveness of the proposed method is demonstrated by simulation examples.
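
A minimal sketch of the virtual-force idea, assuming a spring-like repulsion that acts only while the robot is closer to the obstacle than the desired distance, and a simple kinematic robot that moves along the force. The gain `k`, the step size, and the point-robot model are illustrative assumptions; the paper's Elman network and fuzzy adaptation are not reproduced.

```python
import math

def virtual_force(robot, obstacle, d_desired, k=1.0):
    """Spring-like repulsion pushing the robot away while it is closer than d_desired."""
    dx, dy = robot[0] - obstacle[0], robot[1] - obstacle[1]
    d = math.hypot(dx, dy)
    if d >= d_desired or d == 0.0:  # outside the field, or the direction is undefined
        return (0.0, 0.0)
    magnitude = k * (d_desired - d)
    return (magnitude * dx / d, magnitude * dy / d)

def settle(robot, obstacle, d_desired, k=1.0, step=0.5, iters=50):
    """Move a point robot along the virtual force (explicit Euler integration)."""
    x, y = robot
    for _ in range(iters):
        fx, fy = virtual_force((x, y), obstacle, d_desired, k)
        x, y = x + step * fx, y + step * fy
    return x, y
```

With `k * step = 0.5`, the distance error halves at every step, so the robot converges to the desired distance without overshoot. An adaptive controller like the paper's is needed when the robot dynamics and obstacle behavior are uncertain rather than this ideal kinematic case.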

13. Honghai Liu, S.Y. Chen, Naoyuki Kubota, "Special Section on Intelligent Video Systems and Analytics", IEEE Transactions on Industrial Informatics, Vol. 8, No. 1, 2012, p. 90. [SCI:881XZ (IF=3.0, 14/60-Q1)] (PDF, DOI)

Abstract—Demand for intelligent video systems and analytics has grown substantially in the past five years, driven by applications in transportation, healthcare, and other areas. IMS Research reports that the video analytics market has grown at a 65% compound annual rate since 2004 and predicts that the sector will be worth £215 million in 2009. This challenges both academic researchers and industrial practitioners to provide timely analytics theory and system solutions that meet the overwhelming global need. The state of the art in computer vision and computational intelligence indicates that algorithms and software will contribute substantially to practical solutions for video analysis and applications in the next five years. Hence, it is timely to bring the ideas and solutions of the worldwide research community onto a common platform and to present the latest advances and developments in embedded video system design, compression modeling, behavior understanding, abnormality detection, real-time performance, and practical implementation of intelligent video systems and analytics. This Special Section highlights the state of the art and future challenges through a selection of papers.

14. Shengyong Chen, Youfu Li, and Ngai Ming Kwok, "Active vision in robotic systems: A survey of recent developments", The International Journal of Robotics Research, Vol. 30, No. 11, Sep. 2011, pp. 1343-1377. [SCI:833ZQ (IF=4.1, TOP-Q1)] (PDF, HTML, DOI) (No.1 in Robotics!) [35-page extended paper: research developments in robotic active vision; the most-read article in September 2011]

Abstract—This paper provides a broad survey of developments in active vision for robotic applications over the last 15 years. With the increasing demand for robotic automation, research in this area has received much attention. Among the many factors that contribute to a high-performing robotic system, planned sensing, or the acquisition of perceptions of the operating environment, is a crucial component. The aim of sensor planning is to determine the pose and settings of vision sensors for undertaking a vision-based task that usually requires obtaining multiple views of the object to be manipulated. Planning for robot vision is a complex problem for an active system because of sensing uncertainty and environmental uncertainty. This paper describes such problems arising in many applications, e.g., object recognition and modeling, site reconstruction and inspection, surveillance, tracking and search, as well as robotic manipulation and assembly, localization and mapping, and navigation and exploration. Many solutions and methods have been proposed to solve these problems. They are summarized in this review so that readers can easily find solution methods for practical applications. Representative contributions, their evaluations and analyses, and future research trends are also addressed at an abstract level.

15. S.Y. Chen, Y.F. Li, Q. Guan, and G. Xiao, "Real-time three-dimensional surface measurement by color encoded light projection", Applied Physics Letters, Vol. 89, No. 11, 11 September 2006, Article 111108. [SCI: 084PI (IF=4.3, TOP-Q1)] (PDF, HTML, DOI) [Real-time 3D surface measurement by color-coded projection]

Abstract—Existing noncontact methods for surface measurement suffer from poor reliability, low scanning speed, or high cost. We present a method for real-time three-dimensional data acquisition by a color-coded vision sensor composed of common components. We use a digital projector controlled by a computer to generate the desired color light patterns. The unique indexing of the light codes is a key problem; it is solved in this study so that surface perception can be performed with only local analysis of neighboring color codes in a single image. Experimental examples and performance analysis are provided.

16. Z. Teng, J. He, A.J. Degnan, S.Y. Chen, N.S. Bahaei, J. Rudd, and J.H. Gillard, "Critical mechanical conditions around neovessels in carotid atherosclerotic plaque may promote intraplaque hemorrhage", Vol. 223, No. 2, August 2012, pp. 321-326. [SCI: (IF=7.215, TOP-Q1)] (PDF, HTML, DOI) [Zone 1 TOP, full paper: critical mechanical conditions and rupture factors of carotid atherosclerotic plaque]

Abstract—Objective ► Intraplaque hemorrhage is an increasingly recognized contributor to plaque instability. Neovascularization of plaque is believed to facilitate the entry of inflammatory cells and red blood cells (RBC). Under physiological conditions, neovessels are subject to mechanical loading from the deformation of atherosclerotic plaque by blood pressure and flow. Local mechanical environments around neovessels and their pathophysiologic significance have not yet been examined. Highlights ► High mechanical stress and stretch were found around the neovessels within carotid atherosclerotic plaque. ► Neovessels surrounded by red blood cells (presumably evidence of fresh hemorrhage) underwent much larger stress and stretch than those without red blood cells nearby. ► These critical mechanical conditions may damage the neovessel wall, promoting the formation of intraplaque hemorrhage.

17. Z. Teng, A.J. Degnan, U. Sadat, F. Wang, V.E. Young, M.J. Graves, S.Y. Chen and J.H. Gillard, "Characterization of healing following atherosclerotic carotid plaque rupture in acutely symptomatic patients: an exploratory study using in vivo cardiovascular magnetic resonance", Journal of Cardiovascular Magnetic Resonance, Vol. 13, Article ID 64, Oct. 27, 2011. [SCI:847NI (IF=4.328, TOP-Q1)] (PDF, HTML, DOI) [Full paper: characteristics of atherosclerotic carotid plaque rupture: an exploratory in vivo cardiovascular magnetic resonance study]

Abstract—Background ► Carotid plaque rupture, characterized by a ruptured fibrous cap (FC), is associated with subsequent cerebrovascular events. However, a ruptured FC may heal following stroke and convey a decreased risk of future events. This study aims to characterize the healing process of ruptured FC by assessing lumen conditions, quantified by lumen curvature and roughness, using in vivo carotid cardiovascular magnetic resonance (CMR). Conclusions ► Carotid plaque healing can be assessed by quantifying lumen curvature and roughness, and the incidence of recurrent cerebrovascular events may be high in plaques that do not heal with time. The assessment of plaque healing may facilitate risk stratification of recent stroke patients on the basis of CMR results.
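
Lumen curvature has to be computed from a discrete contour traced on the CMR image. One common discrete estimator, used here as an assumption since the abstract does not specify the paper's definition, is the Menger curvature: the reciprocal radius of the circle through three consecutive contour points, equal to 4 × triangle area / product of the side lengths. The sketch covers curvature only; the roughness measure is not shown.

```python
import math

def menger_curvature(p, q, r):
    """Curvature 1/R of the circle through three 2D points (0 for collinear or repeated points)."""
    a, b, c = math.dist(p, q), math.dist(q, r), math.dist(r, p)
    if a * b * c == 0.0:
        return 0.0
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])  # twice the signed area
    return 2.0 * abs(cross) / (a * b * c)

def contour_curvatures(points):
    """Curvature at each vertex of a closed contour, using its two neighbours."""
    n = len(points)
    return [menger_curvature(points[i - 1], points[i], points[(i + 1) % n]) for i in range(n)]
```

A healed, smooth lumen gives a slowly varying curvature profile, while a ruptured cap produces local spikes; summarizing that profile is one plausible route to the quantitative comparison the study describes.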

18. S.Y. Chen, J. Zhang, H. Zhang, Q. Guan, Y. Du, C. Yao, J.W. Zhang, "Myocardial Motion Analysis for Determination of Tei-Index of Human Heart", Sensors, Vol. 10, No. 12, 2010, pp. 11428-11439. [SCI:700CB] (PDF, HTML, DOI) (invited) [Full paper: a method for calculating the Tei index through myocardial motion analysis]

Abstract—The Tei index, an important indicator of heart function, lacks a direct computation method because it is difficult to evaluate directly the isovolumic contraction time (ICT) and isovolumic relaxation time (IRT), from which the Tei index is obtained. In this paper, based on a proposed method for accurately measuring the physical phase of the cardiac cycle, a direct method of calculating the Tei index is presented. Experiments on real medical images of the heart show the effectiveness of this method. Moreover, a new method of calculating left ventricular wall motion amplitude is proposed, and experiments show its satisfactory performance.
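
For reference, the Tei index (myocardial performance index) is conventionally defined as (ICT + IRT) / ET, where ET is the ejection time; equivalently (a - b) / b, where a is the interval from mitral valve closure to mitral valve opening (which spans ICT, ET, and IRT) and b is ET. The sketch below only evaluates these formulas. Obtaining ICT and IRT from myocardial motion, which is the paper's contribution, is not shown, and the millisecond values in the usage line are hypothetical.

```python
def tei_index(ict_ms, irt_ms, et_ms):
    """Tei index = (isovolumic contraction time + isovolumic relaxation time) / ejection time."""
    if et_ms <= 0:
        raise ValueError("ejection time must be positive")
    return (ict_ms + irt_ms) / et_ms

def tei_from_intervals(a_ms, b_ms):
    """Equivalent form: a = mitral closure-to-opening interval, b = ejection time."""
    if b_ms <= 0 or a_ms < b_ms:
        raise ValueError("need 0 < b <= a")
    return (a_ms - b_ms) / b_ms

tei = tei_index(ict_ms=50.0, irt_ms=70.0, et_ms=300.0)  # hypothetical timings
```

Both forms give the same value whenever a = ICT + ET + IRT, which is why the index can be obtained once the phase boundaries of the cardiac cycle are located precisely.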

19. H. Shi, W. Wang, N.M. Kwok, S.Y. Chen*, "Game Theory for Wireless Sensor Networks: A Survey", Sensors, Vol. 12, No. 7, 2012, pp. 9055-9097. [SCI] (PDF, HTML, DOI)

Abstract—Game theory (GT) is a mathematical method that describes the phenomenon of conflict and cooperation between intelligent rational decision-makers. In particular, the theory has proven very useful in the design of wireless sensor networks (WSNs). This article surveys recent developments and findings of GT and its applications in WSNs, and provides the community with a general view of this vibrant research area. We first introduce the typical formulation of GT in the WSN application domain. The roles of GT are then described, including routing protocol design, topology control, power control and energy saving, packet forwarding, data collection, spectrum allocation, bandwidth allocation, quality of service control, coverage optimization, WSN security, and other sensor management tasks. Next, three variations of game theory are described, namely the cooperative, non-cooperative, and repeated schemes. Finally, existing problems and future trends are identified for researchers and engineers in the field.

Games covered include: (1) Cooperative game theory; (2) Non-cooperative game theory; (3) Repeated game theory; (4) Coalitional game theory; (5) Evolutionary game theory (extended); (6) Gur game; (7) Bargaining game; (8) Dynamic Bayesian game; (9) TU game (transferable-utility game); (10) NTU game (non-transferable-utility game); (11) Ping-pong game; (12) Zero-sum and non-zero-sum games; and (13) Jamming game.
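
To make the non-cooperative formulation concrete, the sketch below finds the pure-strategy Nash equilibria of a two-player game by checking that each player's action is a best response to the other's. The packet-forwarding payoffs (a benefit of 3 when the other node forwards, a cost of 1 for forwarding) are hypothetical numbers chosen for illustration; they produce the familiar dilemma in which both selfish nodes drop packets.

```python
def pure_nash_equilibria(payoff_a, payoff_b):
    """All (row, col) action pairs where neither player gains by deviating unilaterally.

    payoff_a[i][j]: row player's payoff when row plays i and column plays j.
    payoff_b[i][j]: column player's payoff for the same action pair.
    """
    m, n = len(payoff_a), len(payoff_a[0])
    equilibria = []
    for i in range(m):
        for j in range(n):
            row_best = all(payoff_a[i][j] >= payoff_a[k][j] for k in range(m))
            col_best = all(payoff_b[i][j] >= payoff_b[i][l] for l in range(n))
            if row_best and col_best:
                equilibria.append((i, j))
    return equilibria

# Actions: 0 = forward the other node's packets, 1 = drop them (hypothetical payoffs).
FORWARDING_A = [[2, -1], [3, 0]]
FORWARDING_B = [[2, 3], [-1, 0]]
equilibria = pure_nash_equilibria(FORWARDING_A, FORWARDING_B)
```

Mutual forwarding pays both nodes more, but it is not an equilibrium in the one-shot game; repeated-game and cooperative formulations from the list above are the usual ways WSN designs recover cooperation.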

20. Maria Petrou, Mohamed Jaward, Shengyong Chen and Mark Briers, "Super-resolution in practice: the complete pipeline from image capture to super-resolved subimage creation using a novel frame selection method", Machine Vision and Applications, Vol. 23, No. 3, May 2012, pp. 441-459. [SCI:924QM] (DOI)

Abstract—We present a complete super-resolution system using a camera that is assumed to be on a vibrating platform and to capture frames of a static scene continually; particular regions of interest in these frames have to be super-resolved. In a practical system, the shutter of the camera is not synchronized with the vibrations it is subjected to. We therefore propose a novel method for selecting frames according to their degree of blurring, and we combine a tracker with the sequence of selected frames to identify the subimages containing the region of interest. The extracted subimages are subsequently co-registered using a state-of-the-art sub-pixel registration algorithm. The co-registered subimages are further selected according to the confidence in the registration result. Finally, the subimage of interest is super-resolved using a state-of-the-art super-resolution algorithm. The proposed frame selection method is generically applicable, and it is validated with the help of manual frame quality assessment.
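
Frame selection by degree of blurring needs a per-frame sharpness score. As an assumed stand-in for the paper's own blur criterion, the sketch below uses the variance of the discrete Laplacian, a common sharpness measure (blur suppresses high frequencies and so shrinks the Laplacian's spread), and keeps the `k` highest-scoring frames. Frames are plain lists of grayscale rows.

```python
def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels; higher means sharper."""
    h, w = len(img), len(img[0])
    vals = [img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
            for y in range(1, h - 1) for x in range(1, w - 1)]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def select_sharpest(frames, k):
    """Indices (in original order) of the k frames with the highest sharpness score."""
    scores = [laplacian_variance(f) for f in frames]
    best = sorted(range(len(frames)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(best)
```

The score also depends on scene content and contrast, so it is most meaningful when comparing frames of the same static scene, as in the setting described above.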

21. S.Y. Chen, et al. (authors), Active Sensor Planning for Multiview Vision Tasks (in English), Springer, Berlin, Germany, ISBN: 978-3-540-77071-8, 1027k chars, 278 pages, Apr. 2008. [English monograph, leading international publisher, 3600 chapter downloads]

Abstract—The problem of active sensor planning was first addressed about 20 years ago and has since attracted many researchers. Recently, active sensing has become more important than ever, since a number of advanced robots are now available and many tasks require active behavior to obtain 3D visual information from different aspects. Just as for human beings, it is hard to imagine functioning without active vision for even one minute. An active sensor planner is able to manipulate sensing parameters in a controlled manner and perform active behaviors, such as active sensing, active placement, active calibration, active model construction, and active illumination. Active vision perception is an essential means of fulfilling vision tasks that require intentional actions, e.g., complete reconstruction of an unknown object or dimensional inspection of an industrial workpiece. ... The purpose of this book is to introduce these challenging problems and propose some possible solutions. The main topics, addressed from both theoretical and technical aspects, include sensing activity, configuration, calibration, sensor modeling, sensing constraints, sensing evaluation, viewpoint decision, sensor placement graph, model-based planning, path planning, planning for unknown environments, incremental 3D model construction, measurement, and surface analysis.

22. S.Y. Chen, Sheng Liu, et al. (authors), Implementation of Computer Vision Technology using OpenCV (in Chinese), Science Press, Beijing, ISBN 978-7-03-021210-8, 598k words, 478 pages, May 2008. [Chen Shengyong and Liu Sheng (eds.); 3rd printing; downloaded more than 20,000 times]

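Editor's recommendation: The book has 18 chapters covering more than 200 technical questions and most of the fundamentals of OpenCV programming. Through many lively programming examples and tips, starting from answering questions and solving problems, and drawing on the latest material available on the Internet, it gives concrete solutions and answers to common problems and faults in OpenCV programming, in concise language and with a clear, intuitive structure, and explains in accessible terms the most typical and widely used programming methods in OpenCV. Author's note: It is actually not as good as that; I am embarrassed! I wrote it five years ago, so just browse it casually. When I have time, I will write a better one.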

23. Yujun Zheng, Haihe Shi, S.Y. Chen (authors), Algorithm Design (in Chinese), Posts & Telecom Press, Beijing, ISBN 978-7-115-27435-9, 393k words, 232 pages, May 2012. [Zheng Yujun, Shi Haihe, Chen Shengyong (eds.); 21st Century Planned Computer Textbooks for Higher Education, Premium Series]

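Algorithm Design, compiled by Zheng Yujun, Shi Haihe, and Chen Shengyong, is organized around design strategies. It progressively introduces classical algorithm design (including divide and conquer, dynamic programming, greedy, backtracking, and iterative improvement algorithms), NP-completeness theory, inexact algorithm design (including approximation algorithms, parameterized algorithms, and randomized algorithms), and modern intelligent optimization methods. The presentation gives equal weight to algorithmic thinking and programming practice, and emphasizes developing students' ability to apply algorithmic techniques to practical engineering problems. The book can serve as a textbook for undergraduate and graduate students in computer science and related fields, and as a reference for software developers. Source code for the algorithms is available for download in several languages. To improve teaching effectiveness, the book comes with accompanying lecture slides and dedicated "Algorithm Design Teaching Demonstration Software", which instructors are welcome to use.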

24. S.Y. Chen, H. Tong, Carlo Cattani, "Markov models for image labeling", Mathematical Problems in Engineering, Vol. 2012, Article ID 814356, 2012, 18 pages. [SCI:830HW] (PDF, HTML, DOI)

Abstract—The Markov random field (MRF) is a widely used probabilistic model for expressing interactions among different events. One of its most successful applications is solving image labeling problems in computer vision. This paper surveys recent advances in this field. We give the background, basic concepts, and fundamental formulation of MRFs. Two distinct kinds of discrete optimization methods, namely belief propagation and graph cuts, are discussed. We further focus on solutions to two classical vision problems, namely stereo and binary image segmentation, using the MRF model.
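
For binary segmentation with a Potts smoothness term, a graph cut gives the exact minimum of the MRF energy E(l) = Σ_p D_p(l_p) + w · Σ_(p,q) [l_p ≠ l_q]. The sketch below uses the textbook s-t construction: the terminal edge s→p carries D_p(1) and p→t carries D_p(0), each neighbor pair gets capacity w in both directions, and Edmonds-Karp max flow finds the cut. The dense adjacency matrix, integer costs, and the intensity-based unary terms in the usage lines are simplifying assumptions suited to tiny examples, not the survey's implementation.

```python
from collections import deque
from itertools import product

def _max_flow_source_side(cap, s, t):
    """Edmonds-Karp max flow. Returns (flow value, flags marking the source side of a min cut)."""
    n = len(cap)
    res = [row[:] for row in cap]
    flow = 0
    while True:
        parent = [-1] * n
        parent[s] = s
        queue = deque([s])
        while queue and parent[t] == -1:  # BFS for a shortest augmenting path
            u = queue.popleft()
            for v in range(n):
                if parent[v] == -1 and res[u][v] > 0:
                    parent[v] = u
                    queue.append(v)
        if parent[t] == -1:  # no path left: the last BFS visited exactly the source side
            return flow, [p != -1 for p in parent]
        bottleneck, v = float("inf"), t
        while v != s:
            bottleneck = min(bottleneck, res[parent[v]][v])
            v = parent[v]
        v = t
        while v != s:  # push flow along the path and update residual capacities
            u = parent[v]
            res[u][v] -= bottleneck
            res[v][u] += bottleneck
            v = u
        flow += bottleneck

def energy(labels, unary, edges, w):
    return (sum(unary[p][l] for p, l in enumerate(labels))
            + w * sum(labels[p] != labels[q] for p, q in edges))

def binary_graph_cut(unary, edges, w):
    """Exact minimiser of the binary Potts energy. unary[p] = (cost of label 0, cost of label 1)."""
    n = len(unary)
    s, t = n, n + 1
    cap = [[0] * (n + 2) for _ in range(n + 2)]
    for p, (d0, d1) in enumerate(unary):
        cap[s][p] += d1  # cut when p ends on the sink side (label 1)
        cap[p][t] += d0  # cut when p stays on the source side (label 0)
    for p, q in edges:
        cap[p][q] += w
        cap[q][p] += w
    flow, source_side = _max_flow_source_side(cap, s, t)
    return [0 if source_side[p] else 1 for p in range(n)], flow

def brute_force_labels(unary, edges, w):
    """Exhaustive check for tiny problems."""
    return min(product((0, 1), repeat=len(unary)), key=lambda ls: energy(ls, unary, edges, w))

intensities = [10, 12, 50, 52, 11, 48]  # hypothetical 1-D "image"
unary = [(abs(i - 10), abs(i - 50)) for i in intensities]
edges = [(p, p + 1) for p in range(len(intensities) - 1)]
labels, min_energy = binary_graph_cut(unary, edges, 5)
```

At the optimum, the max-flow value equals the minimum energy, which gives a built-in check. Practical segmenters use specialized max-flow algorithms on sparse grid graphs rather than this dense O(n²) representation.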
