Sydney, NSW, Australia

nmkwok@unsw.edu.au

Abstract

This paper provides a broad survey on developments of active vision in robotic applications over the last 15 years. With the increasing demand for robotic automation, research in this area has received much attention. Among the many factors that attribute to a high performing robotic system, the planned sensing or acquisition of perceptions on the operating environment is a crucial component. The aim of sensor planning is to determine the pose and settings of vision sensors for undertaking a vision-based task that usually requires obtaining multiple views of the object to be manipulated. Planning for robot vision is a complex problem for an active system due to its sensing uncertainty and environmental uncertainty. This paper describes such problems arising from many applications, e.g. object recognition and modeling, site reconstruction and inspection, surveillance, tracking and search, as well as robotic manipulation and assembly, localization and mapping, navigation and exploration. A bundle of solutions and methods have been proposed to solve these problems in the past. They are summarized in this review while enabling readers to easily refer solution methods for practical applications. Representative contributions, their evaluations, analyses, and future research trends are also addressed in an abstract level.

KEY WORDS— active vision, sensor placement, purposive perception planning, robotics, uncertainty, viewpoint scheduling, computer vision

1. Introduction

About 20 years ago, Bajcsy, Cowan, Kovesi, etc. discussed the important concept of active perception. Together with other researchers' initial contributions at that time, the new concept (compared with the Marr paradigm in 1982) on active perception, and consequently the sensor planning problem, was thus initiated in active vision research. The difference between the concepts of active perception and the Marr paradigm is that the former considers vision perception as the intentional action of the mind, while the latter considers it as the procedural process of the matter.

Therefore, active perception mostly encourages the idea of moving a sensor to constrain interpretation of its environment. Since multiple three-dimensional (3D) images need to be taken and integrated from different vantage points to enable all features of interest to be measured, sensor placement which determines the viewpoints with a viewing strategy thus becomes critically important for achieving full automation and high efficiency. Today, the roles of sensor planning can be widely found in most autonomous robotic systems (Chen, Li & Zhang, 2008a).

Active sensor planning is an important means for fulfilling vision tasks that require intentional actions, e.g. complete reconstruction of an unknown object or dimensional inspection of a workpiece. Constraint analysis, active sensor placement, active sensor configuration, 3D data acquisition, and robot action planning are the essential steps in developing such active vision systems.

Research in active vision is concerned with determining the pose and configuration for the visual sensor, plays an important role in robot vision not only because a 3D sensor has a limited field of view and can only see a portion of a scene from a single viewpoint, but also because a global description of objects often cannot be reconstructed from only one viewpoint due to occlusion. Multiple viewpoints have to be planned for many vision tasks to make the entire object strategically visible.

By taking active actions in robotic perception, the vision sensor is purposefully configured and placed at several positions to observe a target. The intentional actions in purposive perception planning introduce active behaviors or purposeful behaviors. The robots with semantic perception can take intentional actions according to its set goal such as going to a specific location or obtaining the full information of an object. The action to be taken depends on the environment and the robot’s own current state. However, difficulties often arise due to sensor noises and the presence of unanticipated obstacles in the workplace. To this end, a strategic plan is needed to complete a vision task, such as navigating through an office environment or modeling an unknown object.

In this paper, we review the advances in active vision technology broadly. Overall, significant progress has been made in several areas, including new techniques for industrial inspection, object recognition, security and surveillance, site modeling and exploration, multi-sensor coordination, mapping, navigation, tracking, etc. Due to space limitations, this review mainly focuses on the introduction of ideas and high-level strategies.

The scope of this paper is very broad across the field of robotics. The term active vision defined in this paper is equivalent to the situation if and only if the robots have to adopt strategies for decisions of sensor placement (replacement) or sensor configuration (reconfiguration). It can be used for either general purposes, or specific tasks.

Actually, no review of this nature can cite every paper that has been published. We include what we believe to be a representative sampling of important work and broad trends from the recent 15 years. In many cases, we provide references in order to better summarize and draw distinctions among key ideas and approaches. For further information regarding the early contributions in this topic, it is suggested to follow the other intensive review in 1995 (Tarabanis, Allen & Tsai, 1995a).

The remainder of this paper is structured as follows: Section 2 briefly gives an overview of related contributions. Section 3 introduces the tasks, problems, and applications of active vision methods. Section 4 discusses the available methods and solutions to specific tasks. We conclude in Section 5 and offer our impressions of current and future trends on the topic.

2. Overview of Contributions

In the literature, there are about 2000 research papers published during 1986-2010, which are tightly related to the topic of active vision perception in robotics, including sensor modeling and optical constraints, definition of best next view, placement strategy, and illumination planning. The number of 2010 records is not complete since we searched the publications only in the first quarter and most articles have not come into the indexing databases yet. Fig. 1 shows the yearly distribution of the published papers. We can find from the plot that: (1) the subject emerged around 1988 and developed rapidly in the first 10 years, thanks to the new concept of “active vision”; (2) it reaches to the first top in 1998; (3) the subject was cool down a little, probably due to the reasons of many difficulties related to “intelligence”; (4) it became very active again since five years ago because of its wide applications; (5) we are currently on the second peak.

Fig. 1. Yearly published records from 1986 to 2010

With regard to the research themes, there are several directions that researcher had adopted in the past. In Table I, we list several classes that categorize the related work of active vision perception; according to target knowledge, sensor type, task or application, approach, evaluation objective, and planning dimensions.

Table I Categories of active vision applications

|

Knowledge |

model-based |

partial |

no priori |

|

|

Sensor |

intensity camera |

range sensor |

both |

others |

|

Task |

inspection |

navigation |

modeling |

recognition |

|

Approach |

generate & test |

synthesis |

graph |

AI |

|

Objective |

visibility |

accuracy |

efficiency |

cost |

|

Dimensions |

1D |

2D |

2.5D |

3D |

2.1. Representative Work

Active vision has very wide applications in robotics. Here we summarize these in the following list where we can find its most significant roles: purposive sensing, object and site modeling, robot localization and mapping, navigation, path planning, exploration, surveillance, tracking, search, recognition, inspection, robotic manipulation, assembly and disassembly, and other purposes.

For the methods used in solving active vision problems, we can also find the tremendous diversity. The mostly used ones are: generate and test, synthesis, sensor simulation, hypothesis and verification, graph theory, cooperative network, space tessellation, geometrical analysis, surface expectation, coverage and occlusion, tagged roadmap, visibility analysis, next best view, volumetric space, probability and entropy, classification and Bayesian reasoning, learning and knowledge-based, sensor structure, dynamic configuration, finite element, gaze and attention, lighting control, fusion, expert system, multi-agent, evolutionary computation, soft computing, fuzzy inference, neural network, basic constraints, and task-driven.

Table II Representative contributions

|

Purpose/Task |

Method |

Representative |

|

inspection |

constraint analysis |

(Trucco, Umasuthan, Wallace & Roberto, 1997; Tarabanis, Tsai & Allen, 1995b; Dunn, Olague & Lutton, 2006) |

|

inspection |

genetic algorithm and graph |

(Chen & Li, 2004) |

|

surveillance |

linear programming, coverage |

(Sivaram, Kankanhalli & Ramakrishnan, 2009), (Bottino, Laurentini & Rosano, 2009) |

|

grasping |

Kalman filter |

(Motai & Kosaka, 2008) |

|

search |

probability |

(Shubina & Tsotsos, 2010) |

|

tracking |

geometrical |

(Barreto, Perdigoto, Caseiro & Araujo, 2010) |

|

exploration |

uncertainty driven |

(Whaite & Ferrie, 1997) |

|

reinforcement learning |

reinforcement learning |

(Kollar & Roy, 2008, Royer, Lhuillier, Dhome & Lavest, 2007) |

|

site modeling |

prediction and verification |

(Reed & Allen, 2000; Chang & Park, 2009; Blaer & Allen, 2009; Marchand & Chaumette, 1999b) |

|

object modeling |

next best view |

(Banta, Wong, Dumont & Abidi, 2000; Chen & Li, 2005; Pito, 1999) |

|

object modeling |

information entropy, rule based |

(Li & Liu, 2005), (Kutulakos & Dyer, 1995) |

|

recognition |

optimal visibility |

(de Ruiter, Mackay & Benhabib, 2010) |

|

recognition |

probabilistic |

(Farshidi, Sirouspour & Kirubarajan, 2009; Roy, Chaudhury & Banerjee, 2005) |

|

path planning |

roadmap |

(Baumann, Dupuis, Leonard, Croft & Little, 2008; Zhang, Ferrari & Qian, 2009) |

|

general |

random occlusion |

(Mittal & Davis, 2008) |

|

camera-lighting |

geometrical |

(Marchand, 2007) |

|

multirobot formation |

graph theory |

(Kaminka, Schechter-Glick & Sadov, 2008) |

Among the huge varieties of tasks and methods, we extract a few representative contributions in Table II for easy appreciation of the state of the art.

2.2. Further Information

For a quick understanding of the related work, it is recommended to read the representative contributions listed in Table II. For further intensive tracking of the literature, the following reviews reflect different aspects of the topic:

(1) Review of active recognition (Arman & Aggarwal, 1993);

(2) Review of industrial inspection (Newman & Jain, 1995);

(3) Review of sensor planning in early stage (Tarabanis et al., 1995a);

(4) Review of 3D shape measurement with active sensing (Chen, Brown & Song, 2000);

(5) Review of surface reconstruction from multiple range images (Zhang, Peng, Shi & Hu, 2000);

(6) Review and comparison of view planning techniques for 3D object reconstruction and inspection (Scott, Roth & Rivest, 2003);

(7) Review of free-form surface inspection techniques (Li & Gu, 2004);

(8) Review of active recognition through next view planning (Roy, Chaudhury & Banerjee, 2004);

(9) Review of 3D measurement quality metrics by environmental factors (MacKinnon, Aitken & Blais, 2008b);

(10) Review of multimodal sensor planning and integration for wide area aurveillance (Abidi, Aragam, Yao & Abidi, 2008);

(11) Review of computer-vision-based fabric defect detection (Kumar, 2008).

Of course, purposive perception planning remains an open problem in the community. The task of finding a suitably small set of sensor poses and configurations for specified reconstruction or inspection goals is extremely important for autonomous robots. The ultimate solution is unlikely existing since the complicated problems always need better solutions along with the development of artificial intelligence.

3. Tasks and Problems

Active vision endows the robot capable of actively placing the sensor at several viewpoints through a planning strategy. It inevitably became a key issue in active systems because the robot had to decide "where to look". In an active vision system, the visual sensor has to be moved frequently for purposeful visual perception. Since the targets may vary in size and distance to the camera and the task requirements may also change in observing different objects or features, a structure-fixed vision sensor is usually insufficient. For a structured light vision sensor, the camera needs to be able to "see" just the scene illuminated by the projector. Therefore the configuration of a vision setup often needs to be changed to reflect the constraints in different views and achieve optimal acquisition performance. On the other hand, a reconfigurable sensor can change its structural parameters to adapt itself to the scene to obtain maximum 3D information from the target. According to task conditions, the problem is roughly classified into two categories, i.e. model-based and non-model-based tasks.

3.1. Model and Non-model based Approaches

For model-based tasks, especially for industrial inspections, the placements of the sensor need to be determined and optimized before carrying out operations. Generally in these tasks, the sensor planning problem is to find a set of admissible viewpoints within the permissible space, which satisfy all of the sensor placement constraints and can complete the required vision task. In most of the related works, the constraints in sensor placement are expressed as a cost function where the planning is aimed at achieving the minimum cost. However, the evaluation of a viewpoint has normally been achieved previously by direct computation. Such an approach is usually formulated for a particular application and is therefore difficult to be applied to general tasks. For a multi-view sensing strategy, global optimization is desired but was rarely considered in the past (Boutarfa, Bouguechal & Emptoz, 2008).

The most typical task of model-based application is for industrial inspection (Yang & Ciarallo, 2001). Along with the CAD model of the target, a sensing plan is generated to completely and accurately acquire the geometry of the target (Olague, 2002; Sheng, Xi, Song & Chen, 2001b). The sensing plan is comprised of the set of viewpoints that defines the exact position and orientation of the camera relative to the target (Prieto, Lepage, Boulanger & Redarce, 2003). Sampling of object surface and viewpoint space is characterized, including measurement and pose errors (Scott, 2009).

For tasks of observing unknown objects or environments, the viewpoints have to be decided in run-time because there is no prior information about the targets. Furthermore, in an inaccessible environment, the vision agent has to be able to take intentional actions automatically. The fundamental objective of sensor placement in such tasks is to increase the knowledge about the unseen portions of the viewing volume while satisfying all placement constraints such as in-focus, field-of-view, occlusion, collision, etc. An optimal viewpoint planning strategy determines each subsequent vantage point and offers the obvious benefit of reducing and eliminating the labor required to acquire an object's surface geometry. A system without planning may need as many as seventy range images for recovering a 3D model with normal complexity, with significant overlap between them. It is possible to reduce the number of sensing operations to less than ten with a proper sensor planning strategy. Furthermore, it also makes it possible to create a more accurate and complete model by utilizing a physics-based model of the vision sensor and its placement strategy.

The most typical task of non-model based application is for target modeling (Banta et al., 2000). Online planning is required to decide where to look (Lang & Jenkin, 2000) for site modeling (Reed & Allen, 2000) or real-time exploration and mapping (Kollar & Roy, 2008). Of the published literature in active vision perception over the years, (Cowan & Kovesi, 1988) is one of the earliest research on this problem in 1988 although some primary works can be found in the period 1985-1987.

To date, there are more than 2000 papers. At the early stage, these works were focused on sensor modeling and constraint analysis. In the first 10 years, most of these research works were model-based and usually for applications in automatic inspection or recognition. The generate-and-test method and the synthesis method are mostly used. In the recent 10 years, while optimization was still in development for model-based problems, the importance is being increasingly realized in planning viewpoints for unknown objects or no a priori environment because this is very useful for many active vision tasks such as site modeling, surveillance, and autonomous navigation. The tasks and problems are summarized in this section separately.

3.2. Purposive Sensing

The purposive sensing in robotic tasks is to obtain better images for robot understanding. Efficiency and accuracy are often primarily concerned in acquisition of 3D images (Chen, Li & Zhang, 2008b; Li & Wee, 2008; Fang, George & Palakal, 2008). Taking the most common example of using stereo image sequences during robot movement, not all input images contribute equally to the quality of the resultant motion. Since several images may often contain similar and hence overly redundant visual information. This leads to unnecessarily increased processing times. On the other hand, a certain degree of redundancy can help to improve the reconstruction in more difficult regions of a model. Hornung et al. proposed an image selection scheme for multi-view stereo which results in improved reconstruction quality compared to uniformly distributed views (Hornung, Zeng & Kobbelt, 2008).

People have also sought methods for determining the probing points for efficient measurement and reconstruction of freeform surfaces (Li & Liu, 2003). For an object that has a large surface or a local steep profile, a variable resolution optical profile measurement system that combined two CCD cameras with zoom lenses, one line laser and a three-axis motion stage was constructed (Tsai & Fan, 2007). The measurement system can flexibly zoom in or out the lens to measure the object profile according to the slope distribution of the object. Model-based simulation system is helpful for planning numerically controlled surface scanning (Wu, Suzuki & Kase, 2005). The scanning-path determination is equivalent to the solution of next best view in this aspect (Sun, Wang, Tao & Chen, 2008).

In order to obtain a minimal error in 3D measurements (MacKinnon, Aitken & Blais, 2008a), an optimization design of the camera network in photogrammetry is useful in 3D reconstruction from several views by triangulation (Olague & Mohr, 2002). The combination of laser scanners and touch probes can potentially lead to more accurate, faster, and denser measurements. To overcome the conflict between efficiency and accuracy, Huang and Qian developed a dynamic sensing-and-modeling approach for integrating a tactile point sensor and an area laser scanner to improve the measurement speed and quality (Huang & Qian, 2007).

Spatial uncertainty and resolution are the primary metrics of image quality; however, spatial uncertainty is affected by a variety of environmental factors. A review of how researchers attempted to quantify these environmental factors can be found in (MacKinnon et al., 2008b), along with spatial uncertainty and resolution, has provided an illustration of a wide range of quality metrics.

For reconstruction in large scenes having large depth ranges with depth discontinuities, an idea is available to integrate coarse-to-fine image acquisition and estimation from multiple cues (Das & Ahuja, 1996).

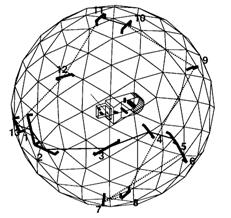

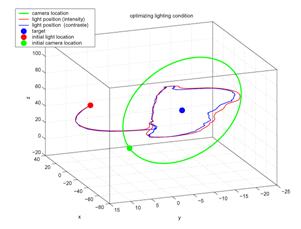

For construction of realistic models, simultaneous capture of the geometry and texture (Treuillet, Albouy & Lucas, 2007) is inevitable. The quality of the 3D reconstruction depends not only on the complexity of the object but also on its environment. Good viewing and illumination conditions ensure image quality and thus minimize the measurement error. Belhaoua et al. argued the placement problem of lighting sources moving within a virtual geodesic sphere containing the scene, with the aim to find positions leading to minimum errors for the subsequent 3D reconstruction (Belhaoua, Kohler & Hirsch, 2009; Liu, 2009). It is also found that automatic light source placement plays an important role for maximum visual information recovery (Vazquez, 2007).

3.2.1. Object Modeling

In order to reconstruct an object completely and accurately (Shum, Hebert, Ikeuchi & Reddy, 1997; Banta et al., 2000; Lang & Jenkin, 2000; Doi, Sato & Miyake, 2005; Li & Liu, 2005), besides the way is to determine the scanning path (Wang, Zhang & Sun, 2009; Larsson & Kjellander, 2008), multiple images have to be acquired from different views (Pito, 1999). An increasing number of views generally improve the accuracy of the final 3D model but it also increases the time needed to build the model. The number of the possible views can, in principle, be infinite. Therefore, it makes sense to try to reduce the number of needed views to a minimum while preserving a certain accuracy of the model, especially in applications for which the efficiency is an important issue. Approaches to Next View Planning not only can get 3D shapes with minimal views (Sablatnig, Tosovic & Kampel, 2003; Zhou, He & Li, 2008), but also is especially useful for acquisition of large-scale indoor and outdoor scenes (Blaer & Allen, 2007) or interior and exterior model (Null & Sinzinger, 2006), even with partial occlusions (Triebel & Burgard, 2008).

For minimizing the number of images for complete 3D reconstruction where no prior information about the objects is available, in the literature techniques are explored based on characterizing the shapes to be recovered in terms of visibility and number and nature of cavities (He & Li, 2006b; Lin, Liang & Wu, 2007; Loniot, Seulin, Gorria & Meriaudeau, 2007; Chen & Li, 2005; Zetu & Akgunduz, 2005; Pito, 1999).

Typically, Callieri et al. designed a system to reduce the three main bottlenecks in human-assisted 3D scanning: the selection of the range maps to be taken (view planning), the positioning of the scanner in the environment, and the range maps' alignment. The system is designed around a commercial laser-based 3D scanner moved by a robotic arm. The acquisition session is organized in two stages. First, an initial sampling of the surface is performed by automatic selection of a set of views. Then, some added views are automatically selected, acquired and merged to the initial set in order to fill the surface regions left unsampled (Callieri, Fasano, Impoco, Cignoni, Scopigno, Parrini et al. 2004). Similar techniques of free-form surface scanning can be found in (Huang & Qian, 2008b; Huang & Qian, 2008a; Fernandez, Rico, Alvarez, Valino & Mateos, 2008).

The strategy of viewpoint selection for global 3D reconstruction of unknown objects in (Jonnalagadda, Lumia, Starr & Wood, 2003) has four steps: local surface feature extraction, shape classification, viewpoint selection and global reconstruction. An active vision system (Biclops) with two cameras constructed for independent pan/tilt axes, extracts 2D and 3D surface features from the scene. These local features are assembled into simple geometric primitives. The primitives are then classified into shapes, which are used to hypothesize the global shape of the object. The next viewpoint is chosen to verify the hypothesized shape. If the hypothesis is verified, some information about global reconstruction of a model can be stored. If not, the data leading up to this viewpoint is re-examined to create a more consistent hypothesis for the object shape.

Unless using 3D reconstruction from unordered viewpoints (Liang & Wong, 2010), incremental modeling is the common choice for complete automation of scanning an unknown object. An incremental model, representing object surface and workspace occupancy is combined together with an optimization strategy, in selecting the best scanning viewpoints and generates adaptive collision-free scanning trajectories. The optimization strategy attempts to select the viewpoints that maximize the knowledge of the object taking into account the completeness of the current model and the constraints associated with the sensor (Martins, Garcia-Bermejo, Zalama & Peran, 2003).

Most methods for model acquisition require the combination of partial information from different viewpoints in order to obtain a single, coherent model. This, in turn, requires the registration of partial models into a common coordinate frame, a process that is usually done off-line. As a consequence, holes due to undersampling and missing information often cannot be detected until after the registration. Liu and Heidrich introduced a fast, hardware-accelerated method for registering a new view to an existing partial geometric model in a volumetric representation. The method performs roughly one registration every second, and is therefore fast enough for on-the-fly evaluation by the user (Liu & Heidrich, 2003). A procedure is also recently proposed to identify missing areas from the initial scanning data from default positions and to locate additional scanning orientations to fill the missing areas (Chang & Park, 2009). On the contrary, He and Li et al. prefer to a self-determination criterion to inform the robot when the model is complete (He & Li, 2006a; Li, He & Bao, 2005; Li, He, Chen & Bao, 2005).

3.2.2. Site Modeling

It is very time-consuming to construct detailed models of large complex sites by manual process. Therefore, in tasks of modeling unstructured environments (Craciun, Paparoditis & Schmitt, 2008), especially in wide outdoor area, perception planning is required to reduce unobserved portions (Asai, Kanbara & Yokoya, 2007). One of the main drawbacks is determining how to guide the robot and where to place the sensor to obtain complete coverage of a site (Reed & Allen, 2000; Blaer & Allen, 2009). To estimate the computation complexity, if the size of one dimension of the voxel space is n, then there could be O(n2) potential viewing locations. If there are m boundary unseen voxels, the cost of the algorithm could be as high as O(n2´m) (Blaer & Allen, 2009).

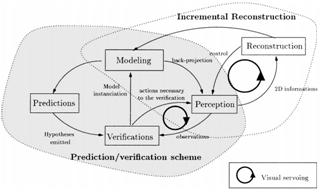

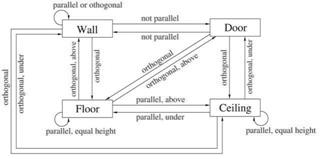

For static scenes, the perception-action cycles can be handled at various levels: from the definition of perception strategies for scene exploration down to the automatic generation of camera motions using visual servoing. Marchand and Chaumette use a structure from controlled motion method which allows an optimal estimation of geometrical primitive parameters (Marchand & Chaumette, 1999b). The whole reconstruction/exploration process has three main perception-action cycles (Fig. 2). It contains the internal perception-action cycle which ensures the reconstruction of a single primitive, and a second cycle which ensures the detection, the successive selection, and finally the reconstruction of all the observed primitives. It partially solves the occlusion problem and obtains a high level description of the scene.

In field environments, it is usually not possible to provide robotic systems with valid/complete geometric models of the task and environment. The robot or robot teams will need to create these models by performing appropriate sensor actions. Additionally, the robot(s) will need to position their sensors in a task directed optimum way. The Instant Scene Modeler (iSM) is a vision system for generating calibrated photo-realistic 3D models of unknown environments quickly using stereo image sequences (Se & Jasiobedzki, 2007). Equipped with iSM, unmanned ground vehicles (UGVs) can capture stereo images and create 3D models to be sent back to the base station, while they explore unknown environments. An algorithm based on iterative sensor planning and sensor redundancy is proposed by Sujan et al. to enable robots to efficiently position their cameras with respect to the task/target (Sujan & Dubowsky, 2005a). Intelligent and efficient strategy is developed for unstructured environment (Sujan & Meggiolaro, 2005).

Fig. 2 The Prediction/Verification scheme for scene exploration (Marchand & Chaumette, 1999b)

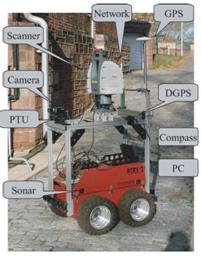

A field robot for site modeling is usually equipped with ranger sensors, DGPS/Compass, Inertial Measurement Unit (IMU), odometers, etc., e.g. the iSM (Se & Jasiobedzki, 2007). More sensors are set up with the AVENUE, for localizing and navigating itself through various environments (Fig. 3).

3.3. Surveillance

Since vision contains much higher information content than other sensors in describing the scene, cameras are frequently applied for surveillance purposes. In these tasks, cameras can be installed in fixed locations and directed, through pan-tilt manipulations, toward the target in an active manner. On the other hand, cameras can be installed on mobile platforms. Surveillance is also tightly connected with target search and tracking where the active vision principle is regarded as an important attribute.

3.3.1. Surveillance with a set of fixed cameras

This problem was addressed in (Sivaram et al., 2009) concerned with how to select the optimal combination of sensors and how to determine their optimal placement in a surveillance region in order to meet the given performance requirements at a minimal cost for a multimedia surveillance system. Therefore, the sensor configuration for surveillance applications calls for coverage optimization (Janoos, Machiraju, Parent, Davis & Murray, 2007; Yao, Chen, Abidi, Page, Koschan & Abidi, 2010). The goal in such problems is to develop a strategy of network design (Saadatseresht & Varshosaz, 2007).

Locating sensors in 2D can be modeled as an Art Gallery problem (Bottino & Laurentini, 2006b; Howarth, 2005; Bodor, Drenner, Schrater & Papanikolopoulos, 2007). Consider the external visibility coverage for polyhedra under the orthographic viewing model. The problem is to compute whether the whole boundary of a polyhedron is visible from a finite set of view directions, and if so, how to compute a minimal set of such view directions (Liu & Ramani, 2009). Bottino et al. provide detailed formulation and solution in their research (Bottino & Laurentini, 2008; Bottino et al., 2009).

3.3.2. Surveillance with mobile robots

Mobile sensors can be used to provide complete coverage of a surveillance area for a given threat over time, thereby reducing the number of sensors required. The surveillance area may have a given threat profile as determined by the kind of threat, and accompanying meteorological, environmental, and human factors (Ma, Yau, Chin, Rao & Shankar, 2009). UGVs equipped with surveillance cameras present a flexible complement to the numerous stationary sensors being used in security applications today (Hernandez & Wang, 2008; Ulvklo, Nygards, Karlholm & Skoglar, 2004). However, to take full advantage of the flexibility and speed offered by a group of UGV platforms, a fast way to compute desired camera locations to cover an area or a set of buildings, e.g., in response to an alarm, is needed (Nilsson, Ogren & Thunberg, 2009) (Nilsson, Ogren & Thunberg, 2008).

Such surveillance systems aim to design an optimal deployment of vision sensors (Angella, Reithler & Gallesio, 2007; Nayak, Gonzalez-Argueta, Song, Roy-Chowdhury & Tuncel, 2008; Lim, Davis & Mittal, 2006). System reconfiguration is sometimes necessary for the autonomous surveillance of a target as it travels through a multi-object dynamic workspace with an a priori unknown trajectory (Bakhtari, Mackay & Benhabib, 2009; Bakhtari & Benhabib, 2007; Bakhtari, Naish, Eskandari, Croft & Benhabib, 2006).

Autonomous patrolling robots are to have significant contributions in security applications for surveillance purposes (Cassinis & Tampalini, 2007; Briggs & Donald, 2000). In the near future robots will also be used in home environments to provide assistance for the elderly and challenged people (Nikolaidis, Ueda, Hayashi & Arai, 2009; Biegelbauer et al., 2010).

In monitoring applications (Sakane, Kuruma, Omata & Sato, 1995; Mackay & Benhabib, 2008a), Schroeter et al. present a model based system for a mobile robot to find an optimal pose for the observation of a person in indoor living environments. The observation pose is derived from a combination of the camera position and view direction as well as further parameters like the aperture angle. The optimal placement of a camera is required because of the highly dynamic range of the scenes near windows or other bright light sources, which often results in poor image quality due to glare or hard shadows. The method tries to minimize these negative effects by determining an optimal camera pose based on two major models: A spatial free space model and a representation of the lighting (Schroeter, Hoechemer, Mueller & Gross, 2009). A recent review of multimodal sensor planning and integration for wide area surveillance can be found in (Abidi et al., 2008).

3.3.3. Search

Object search is also a model-based vision task which is to find a given object in a known or unknown environment. The object search task not only needs to perform object recognition and localization, but also involves sensing control, environment modeling, and path planning (Wang et al., 2008; Shimizu, Yamamoto, Wang, Satoh, Tanahashi & Niwa, 2005).

The task is often complicated by the fact that portions of the area are hidden from the camera view. Different viewpoints are necessary to observe the target. As a consequence, viewpoint selection for search tasks seems similar to viewpoint selection for data acquisition of an unknown scene. The problem of visual matching was shown to be NP-complete (Ye & Tsotsos, 1999). It has exponential time complexity relative to the size of the image. Suppose one wishes a robot to search for and locate a particular object in a 3D world. A direct search certainly suffices for the solution. Assuming that the target may lie with equal probability at any location, the viewpoint selection problem is resolved by moving a camera to take images of the previously not viewed portions of the full 3D space. This kind of exhaustive, brute-force approach can suffice for a solution; however, it is both computationally and mechanically prohibitive.

In practice, sensor planning is very important for object search since a robot needs to interact intelligently and effectively with the environment. Visual attention may be a mechanism that optimizes the search processes inherent in vision, but attention itself is a complex phenomenon (Shubina & Tsotsos, 2010). The utility of a search operation f is given by

![]() (1)

(1)

where t(f) is the time when action f takes. The knowledge about the potential target locations is encoded as a target probability distribution p(ci,τ). The goal is to select an operation with the highest utility value. Since the cost of each action is approximately the same if the robot is stationary, the next action is selected in such a way that it maximizes the numerator of (1) (Shubina & Tsotsos, 2010).

With the assumption of a realistic, high dimensional and continuous state space for the representation of objects expressing their rotation, translation and class, Eidenberger et al. present an exclusively parametric approach for the state estimation and decision making process to achieve very low computational complexity and short calculation times (Eidenberger, Grundmann, Feiten & Zoellner, 2008).

3.3.4. Tracking

Active tracking is a part of the active vision paradigm (Riggs, Inanc & Weizhong, 2010), where visual systems adapt themselves to the observed environment in order to obtain extra information or perform a task more efficiently. An example of active tracking is fixation, where camera control assures that the gaze direction is maintained on the same object over time. A general approach for the simultaneous tracking of multiple moving targets using a generic active stereo setup is studied in (Barreto et al., 2010). The problem is formulated for objects on a plane, where cameras are modeled as line scan cameras, and targets are described as points with unconstrained motion.

Dynamically reconfigurable vision systems have been suggested in an online mode (Reddi & Loizou, 1995; Wang, Hussein & Erwin, 2008), as effective solutions for achieving this objective, namely, relocating cameras to obtain optimal visibility for a given situation. To obtain optimal visibility of a 3D object of interest, its six DOF position and orientation must be tracked in real time. An autonomous, real-time, six DOF tracking system for a priori unknown objects should be able to (1) select the object, (2) build its approximate 3D model and use this model to (3) track it in real time (de Ruiter et al., 2010).

Zhu and Sakane developed an adaptive panoramic stereovision approach for localizing 3D moving objects (Zhou & Sakane, 2003). The research focuses on cooperative robots involving cameras that can be dynamically composed into a virtual stereovision system with a flexible baseline in order to detect, track, and localize moving human subjects in an unknown indoor environment. It promises an effective way to solve the problems of limited resources, view planning, occlusion, and motion detection of movable robotic platforms. Theoretically, two interesting conclusions are given. (1) If the distance from the main camera to the target, D1, is significantly greater (e.g., ten times greater) than the size of the robot (R), the best geometric configuration is

![]() (2)

(2)

where B is the best baseline distance for minimum distance error and f1 is the main camera’s inner angle of the triangle formed by the two robots and the target. (2) The depth error of the adaptive stereovision is proportional to D1.5 where D is the camera-target distance, which is better than the case of the best possible fixed baseline stereo in which depth error is proportional to the square of the distance (D2).

Some problems like camera fixation, object capturing and detecting, and road following involve tracking or fixating on 3D points and features (Biegelbauer, Vincze & Wohlkinger, 2010). The solutions to these problems also require an analysis of depth and motion. Theoretical approaches based on optical flow are the most common solution to these problems (Han, Choi & Lee, 2008; Raviv & Herman, 1994).

Vision tracking systems for surveillance and motion capture rely on a set of cameras to sense the environment (Chen & Davis, 2008). There is a decision problem which corresponds to answering the question: can the target escape the observer’s view? Murrieta-Cid et al. defined this problem and considered to maintain surveillance of a moving target by a nonholonomic mobile observer (Murrieta-Cid, Muoz & Alencastre, 2005). The observer's goal is to maintain visibility of the target from a predefined, fixed distance. An expression derived for the target velocities is:

![]() (3)

(3)

where q and f are the observer’s orientation, u1 and u3 are moving speeds, and l is the predefined surveillance distance.

To maintain the fixed required distance between the target and the observer, the relationship between the velocity of the target and the linear velocity of the observer is

![]() (4)

(4)

The

above equation defines an ellipse in the u1-u3 plane and

the constraint on u1 and u3 is that they should be inside

the ellipse while supposing![]() . They deal specifically with the

situation in which the only constraint on the target's velocity is a bound on the

speed, and the observer is a nonholonomic, differential drive system having

bounded speed. The system model is developed to derive a lower bound for the

required observer speed.

. They deal specifically with the

situation in which the only constraint on the target's velocity is a bound on the

speed, and the observer is a nonholonomic, differential drive system having

bounded speed. The system model is developed to derive a lower bound for the

required observer speed.

To dynamically manage the viewpoint of a vision system for optimal 3D tracking, Chen and Li adopt the effective sample size in the proposed particle filter as a criterion for evaluating tracking performance and employ it to guide the view-planning process for finding the best viewpoint configuration. The vision system is designed and configured to maintain a largest number of effective particles, which minimizes tracking error by revealing the system to a better swarm of importance samples and interpreting posterior states in a better way (Chen & Li, 2009; Chen & Li, 2008).

3.4. Mobile Robotics

In applications involving the deployment of mobile robots, it is a fundamental requirement that the robot is able to take perception of its navigation environment. When cameras are equipped on mobile robots, it enables the robot to observe its workspace and active vision naturally becomes a very desirable ability to improve the autonomy of these machines.

3.4.1. Localization and Mapping

As a problem of determining the position of a robot or its vision sensor, localization has been recognized as one of the most fundamental problems in mobile robotics (Caglioti, 2001; Flandin & Chaumette, 2002). Mobile robots often determine their actions according to their positions. Thus, their observation strategies are mainly for self-localization (Mitsunaga & Asada, 2006). The aim of localization is to estimate the position of a robot in its environment, given local sensorial data. Stereo vision-based 3D localization is used in a semi-automated excavation system for partially buried objects in unstructured environments by (Maruyama, Takase, Kawai, Yoshimi, Takahashi & Tomita, 2010). Autonomous navigation is also possible in outdoor situations with the use of a single camera and natural landmarks (Royer et al., 2007; Chang, Chou & Wu, 2010).

Fig. 3 The ATRV-2 AVENUE-based mobile robot for site modeling (Blaer & Allen, 2009)

Zingaretti and Frontoni present an efficient metric for appearance-based robot localization (Zingaretti & Frontoni, 2006). This metric is integrated in a framework that uses a partially observable Markov decision process as position evaluator, thus allowing good results even in partially explored environments and in highly perceptually aliased indoor scenarios. More details of this topic are related to the research on simultaneous localization and mapping (SLAM) which is also a challenging problem and has been widely investigated (Borrmann, Elseberg, Lingemann, Nüchter & Hertzberg, 2008; Borrmann et al., 2008; Frintrop & Jensfelt, 2008a; Nüchter & Hertzberg, 2008; Gonzalez-Banos & Latombe, 2002).

In intelligent transportation systems, vehicle localization usually relies on Global Positioning System (GPS) technology; however the accuracy and reliability of GPS are degraded in urban environments due to satellite visibility and multipath effects. Fusion of data from a GPS receiver and a machine vision system can help to position the vehicle with respect to objects in its environment (Rae & Basir, 2009).

In robotics, maps are metrical and sometimes topological. A map contains space-related information about the environment, i.e., not all that a robot may know or learn about its world need go into the map. Metric maps are supposed to represent the environment geometry quantitatively correctly, up to discretization errors (Nüchter & Hertzberg, 2008).

Again for the SLAM problem (Kaess & Dellaert, 2010; Ballesta, Gil, Reinoso, Julia & Jimenez, 2010), the goal is to integrate the information collected during navigation into the most accurate map possible. However, SLAM does not address the sensor-placement portion of the map-building task. That is, given the map built so far where should the robot go next? In (Gonzalez-Banos & Latombe, 2002), an algorithm is proposed to guide the robot through a series of "good" positions, where "good" refers to the expected amount and quality of the information that will be revealed at each new location. This is similar to the next-best-view, (NBV) problem. However, in mobile robotics the problem is complicated by several issues, two of which are particularly crucial. One is to achieve safe navigation despite an incomplete knowledge of the environment and sensor limitations. The other is the need to ensure sufficient overlap between each new local model and the current map, in order to allow registration of successive views under positioning uncertainties inherent to mobile robots. They described an NBV algorithm that uses the safe-region concept to select the next robot position at each step. The new position is chosen within the safe region in order to maximize the expected gain of information under the constraint that the local model at this new position must maintain a minimal overlap with the current global map (Gonzalez-Banos & Latombe, 2002).

Besides individual scans are registered into a coherent 3D geometry map by SLAM, semantic knowledge can help an autonomous robot act goal-directedly, then, consequently, part of this knowledge has to be related to objects, functionalities, events, or relations in the robot's environment. A semantic map for a mobile robot is a map that contains, in addition to spatial information about the environment, assignments of mapped features to entities of known classes (Nüchter & Hertzberg, 2008).

While considerable progress has been made in the area of mobile networks by SLAM or NBV, a framework that allows the vehicles to reconstruct target based on a severely underdetermined data set is rarely addressed. Recently, Mostofi and Sen present a compressive cooperative mapping framework for mobile exploratory networks. The cooperative mapping of a spatial function is based on a considerably small observation set where a large percentage of the area of interest is not sensed directly (Mostofi & Sen, 2009).

3.4.2. Navigation, Path Planning and Exploration

For exploring unknown environments, many robotic systems use topological structures as a spatial representation. If localization is done by estimate of the global pose from landmark information, robotic navigation is tightly coupled to metric knowledge. On the other hand, if localization is based on weaker constraints, e.g. the similarity between images capturing the appearance of places or landmarks, the navigation can be controlled by a homing algorithm. Similarity based localization can be scaled to continuous metric localization by adding additional constraints (Hubner & Mallot, 2007; Baker & Kamgar-Parsi, 2010; Hovland & McCarragher, 1999; Whaite & Ferrie, 1997; Sheng, Xi, Song & Chen, 2001a; Kim & Cho, 2003).

If the environment is partially unknown, the robot needs to explore its work-space autonomously. Its task is to incrementally build up a representation of its surroundings (Suppa & Hirzinger, 2007; Wang & Gupta, 2007). Local navigation strategy has to be implemented for unknown environment exploration (Amin, Tanoto, Witkowski, Ruckert & bdel-Wahab, 2008; Thielemann, Breivik & Berge, 2010; Radovnikovich, Vempaty & Cheok, 2010).

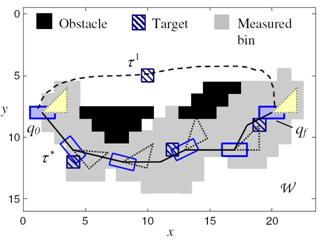

Fig. 4 An example of sensor path planning (Zhang et al., 2009), where both the location and geometry of targets and obstacles must be accounted for in planning the sensor path.

For navigation in an active way, an UGV is usually equipped with a “controllable” vision head, e.g. a stereo camera on pan/tilt mount (Borenstein, Borrell, Miller & Thomas, 2010; Banish, Rodgers, Hyatt, Edmondson, Chenault, Heym et al. 2010). Kristensen presented the problem of autonomous navigation in partly known environments (Kristensen, 1997). Bayesian decision theory was adopted in the sensor planning approach. The sensor modalities, tasks, and modules were described separately and Bayes decision rule was used to guide the behavior. The decision problem for one sensor was constructed with a standard tree for myopic decision. In other aspects, indoor navigation using adaptive neuro-fuzzy controller is addressed in (Budiharto, Jazidie & Purwanto, 2010) and path recognition for outdoor navigation is addressed in (Shinzato, Fernandes, Osorio & Wolf, 2010).

The problem of path planning for a robotic sensor in (Zhang et al., 2009) is assumed with a platform geometry AÌR2, and a field-of-view geometry SÌR2, that navigates a workspace WÌR2 for the purpose of classifying multiple fixed targets based on posterior and prior sensor measurements, and environmental information (Fig. 4). The robotic sensor path τ must simultaneously achieve multiple objectives including: (1) avoid all obstacles in W; (2) minimize the traveled distance; and (3) maximize the information value of path (τ), i.e. the measurement set along a path τ. The robotic sensor performance is defined by an additive reward function:

R(τ) = wV V(τ) − wD D(τ) (5)

where, V(τ) is the information value of path (τ), and D(τ) is the distance traveled along τ. The constants wV and wD weigh the trade-off between the values of the measurements and the traveled distance. Then, the Geometric Sensor Path Planning Problem is defined as:

Problem: Given a layout W and a joint probability mass function P, find a path τ* for a robotic sensor with platform A and field-of-view S that connects the two ends, and maximizes the profit of information defined in (1) (Zhang et al., 2009).

In active perception for exploration, navigation, or path planning, there is a situation that the robot has to work in a dynamic environment and the sensing process may associate with many noises or uncertainties. Research in this issue has become the most active in recent years. A reinforcement learning scheme is proposed for exploration in (Kollar & Roy, 2008). Occlusion-free path planning are studied in (Baumann et al., 2008; Nabbe & Hebert, 2007; Baumann, Leonard, Croft & Little, 2010; Oniga & Nedevschi, 2010).

3.5. Robotic Manipulations

The use of robotic manipulators had shown a boost in manufacturing productivity. This increase depends critically on the simplicity that the robot manipulator can be re-configured or re-programmed to perform various tasks. To this end, actively placing the camera to guide the manipulator motion has become a key component of automatic robotic manipulator systems.

3.5.1. Robotic Manipulation

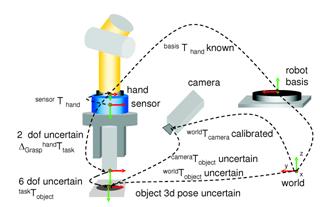

Vision guided approaches are designed to robustly achieve high precision in manipulation (Nickels, DiCicco, Bajracharya & Backes, 2010; Miura & Ikeuchi, 1998) or to improve the productivity (Park et al., 2006). For the assembly/disassembly tasks, a long-term aim in robot programming is the automation of the complete process chain, i.e. from planning to execution. One challenge is to provide solutions which are able to deal with position uncertainties (Fig. 5) (Thomas, Molkenstruck, Iser & Wahl, 2007). Nelson et al. introduced a dynamic sensor planning method (Nelson & Papanikolopoulos, 1996). They used an eye-in-hand system and considered the resolution, field-of-view, depth-of-view, occlusions, and kinematic singularities. A controller was proposed to combine all the constraints into a system and resulted in a control law. Kececi et al. employed an independently mobile camera with a 6-DOF robot to monitor a disassembly process so that it can be planned (Kececi et al., 1998). A number of candidate view-poses are being generated and subsequently evaluated to determine an optimal view pose. A good view-pose is defined with the criterion which prevents possible collisions, minimizes mutual occlusions, keeps all pursued objects within the field-of-view, and reduces uncertainties.

Stemmer et al. used a vision sensor, with color segmentation and affine invariant feature classification, to provide the position estimation within the region of attraction (ROA) of a compliance-based assembly strategy (Stemmer, Schreiber, Arbter & Albu-Schaffer, 2006). An assembly planning toolbox is based on a theoretical analysis and the maximization of the ROA. This guarantees the local convergence of the assembly process under consideration of the geometry in part. The convergence analysis invokes the passivity properties of the robot and the environment.

Fig. 5 Vision sensor for solving object poses and uncertainties in the assembly work cell (Thomas et al., 2007)

Object verification (Sun, Sun & Surgenor, 2007), feature detectability (Zussman, Schuler & Seliger, 1994), and real-time accessibility analysis for robotic (Jang, Moradi, Lee, Jang, Kim & Han, 2007) are also major concerns in robotic manipulation. The access direction of the object to grasp can be determined through visibility query (Jang, Moradi, Le Minh, Lee & Han, 2008; Motai & Kosaka, 2008).

3.5.2. Recognition

In many cases, a single view may not contain sufficient features to recognize an object unambiguously (Byun & Nagata, 1996). Therefore, another important application of sensor planning is active object recognition (AOR) which recently attracts much attention within the computer vision community.

In fact, two objects may have all views in common with respect to a given feature set, and may be distinguished only through a sequence of views (Roy, Chaudhury & Banerjee, 2000). Further, in recognizing 3D objects from a single view, recognition systems often use complex feature sets. Sometimes, it may be possible to achieve the same result, incurring less error and smaller processing cost by using a simpler feature set and suitably planning multiple observations. A simple feature set is applicable for a larger class of objects than a model base with a specific complex feature set. Model base-specific complex features such as 3D invariants have been proposed only for special cases. The purpose of AOR is to investigate the use of suitably planned multiple views for 3D object recognition. Hence the AOR system should also take a decision on "where to look". The system developed by Roy et al. is an iterative active perception system that executes the acquisition of several views of the object, builds a stochastic 3D model of the object and decides the best next view to be acquired (Roy et al., 2005).

In computer vision, object recognition problems are often based on single image data processing (SyedaMahmood, 1997; Eggert, Stark & Bowyer, 1995). In various applications this processing can be extended to a complete sequence of images, usually received passively. In (Deinzer, Derichs, Niemann & Denzler, 2009), a camera is selectively moved around a target object. Reliable classification results are desirable with a clearly reduced amount of necessary views by optimizing the camera movement for the access of new viewpoints. The optimization criterion is the gain of class discriminative information when observing the appropriate next image (Roy et al., 2000; Gremban & Ikeuchi, 1994).

While relevant research in active object recognition/pose estimation has mostly focused on single-camera systems, Farshidi et al. propose two multi-camera solutions that can enhance object recognition rate, particularly in the presence of occlusion. Multiple cameras simultaneously acquire images from different view angles of an unknown, randomly occluded object belonging to a set of a priori known objects (Farshidi et al., 2009). Eight objects, as illustrated in Fig. 6, are considered in the experiments with four different pose angles, each 90O apart. Also, five different levels of occlusion have been designated for each camera’s image.

Fig. 6 The objects for active recognition experiments (Farshidi et al., 2009)

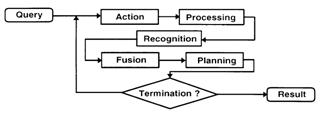

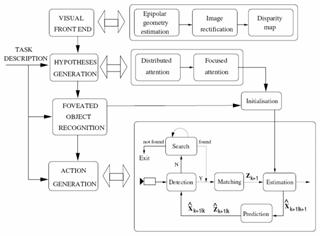

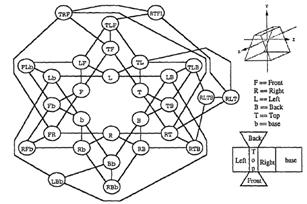

In the early stage, Ikeuchi et al. developed a sensor modeler, called VANTAGE, to place the light sources and cameras for object recognition (Ikeuchi & Robert, 1991; Wheeler & Ikeuchi, 1995). It mostly solves the detectability (visibility) of both light sources and cameras. Borotschnig summarized a framework for appearance-based AOR as in Fig. 7 (Borotschnig & Paletta, 2000).

Among the literature of recognition, many solutions are available (Dickinson, Christensen, Tsotsos & Olofsson, 1997; Arman & Aggarwal, 1993; Kuno, Okamoto & Okada, 1991; Callari & Ferrie, 2001). Typically, we may refer to the fast recognition by learning (Grewe & Kak, 1995) and function-based reasoning (Sutton & Stark, 2008), as well as multi-view recognition of time-varying geometry objects (Mackay & Benhabib, 2008b). A review of sensor planning for active recognition can be found in (Roy et al., 2004).

Fig. 7 The framework of appearance-based active object recognition (Borotschnig & Paletta, 2000)

3.5.3. Inspection

Dimensional inspection using a contact-based coordinate measurement machine is time consuming because the part can only be measured on a point-by-point basis (Prieto, Redarce, Lepage & Boulanger, 2002). The automotive industry has been seeking a practical solution for rapid surface inspection using a 3D sensor. The challenge is the capability to meet all the requirements including sensor accuracy, resolution, system efficiency, and system cost. A robot-aided sensing system can automatically allocate sensor viewing points, measure the freeform part surface, and generate an error map for quality control (Shi, Xi & Zhang, 2010; Shih & Gerhardt, 2006; Bardon, Hodge & Kamel, 2004). Geometric dimension and tolerance inspection process is also needed in industries to examine the conformity of manufactured parts with the part specification defined at the design stage (Gao, Gindy & Chen, 2006; Sebastian, Garcia, Traslosheros, Sanchez & Dominguez, 2007).

In fact, in the literature, sensor planning for the model-based tasks is mostly related to industrial inspection, where a nearly perfect estimate of the object's geometry and possibly its pose are known and the task is to determine how accurately the object has been manufactured (Mason, 1997; Trucco et al., 1997; Sheng et al., 2001b; Yang & Ciarallo, 2001; Wong & Kamel, 2004; Sheng, Xi, Song & Chen, 2003). It was said that this problem in fact was a nonlinear multi-constraint optimization problem (Chen & Li, 2004; Taylor & Spletzer, 2007; Rivera-Rios, Shih & Marefat, 2005; Dunn & Olague, 2004). The problem comprises camera, robot and environmental constraints. A viewpoint is optimized and evaluated by a cost function which uses a probability-based global search technique. It is difficult to compute robust viewpoints which satisfy all feature detectability constraints. Optimization methods such as tree annealing and genetic algorithms are commonly used to compute the viewpoints subjected to multi-constraints (Chen & Li, 2004; Olague & Mohr, 2002; Olague & Dunn, 2007).

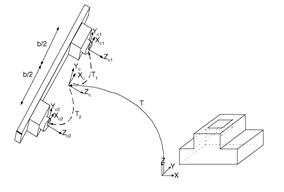

Tarabanis et al. developed a model-based sensor planning system, the machine vision planner (MVP), which works with 2D images obtained from a CCD camera (Tarabanis, Tsai & Kaul, 1996; Tarabanis et al., 1995b). The MVP system takes a synthesis rather than a generate-and-test approach, thus giving rise to a powerful characterization of the problem. In addition, the MVP system provides an optimization framework in which constraints can easily be incorporated and combined. The MVP system attempts to detect several features of interest in the environment that are simultaneously visible, inside the field of view, in focus, and magnified, by determining the domain of admissible camera locations, orientations, and optical settings. A viewpoint is sought that is both globally admissible and central to the admissibility domain (Fig. 8).

Fig. 8 The admissible domain of viewpoints (Tarabanis et al., 1995b)

Based on the work on the MVP system, Abrams et. al. made a further development for planning viewpoints for vision inspection tasks within a robot work-cell (Abrams, Allen & Tarabanis, 1999). The computed viewpoints met several constraints such as detectability, in-focus, field-of-view, visibility, and resolution. The proposed viewpoint computation algorithm also fell into the "volume intersection method" (VIM). This is generally a straightforward but very useful idea. Many of the latest implemented planning systems can be traced back to this contribution. For example, Rivera-Rios et al. present a probabilistic analysis of the effect of the localization errors on the dimensional measurements of the line entities for a parallel stereo setup (Fig. 9). The probability that the measurement error is within an acceptable tolerance was formulated as the selection criterion for camera poses. The camera poses were obtained via a nonlinear program that minimizes the total mean square error of the length measurements while satisfying the sensor constraints (Rivera-Rios et al., 2005).

Fig. 9 Stereo pose determination for dimensional measurement (Rivera-Rios et al., 2005)

In order to obtain a more complete and accurate 3D image of an object, Prieto et al. presented an automated acquisition planning strategy utilizing its CAD model. The work was focused on improving the accuracy of the 3D measured points which is a function of the distance to the object surface and of the laser beam incident angle (Prieto, Redarce, Boulanger & Lepage, 2001; Prieto et al., 2003).

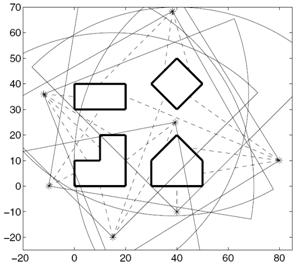

Besides the minimum number of viewpoints is desired in sensor planning, to further improve the efficiency of robot manipulation, we need to reduce the traveling cost of the robot placements (Wang, Krishnamurti & Gupta, 2007; Martins, Garcia-Bermejo, Casanova & Gonzalez, 2005; Chen & Li, 2004). The whole procedure for generating a perception plan is described as: (1) generate a number of viewpoints; (2) reduce redundant viewpoints; (3) if the placement constraints are not satisfied, increase the number of viewpoints; (4) construct a graph corresponding to the space distribution of the viewpoints; and (5) find a shortest path to optimize robot operations.

Automated visual inspection systems are also developed for defect inspection, such as specular surface quality control (Garcia-Chamizo, Fuster-Guillo & zorin-Lopez, 2007), car headlight lens inspection (Martinez, Ortega, Garcia & Garcia, 2008), and others (Perng, Chen & Chang, 2010; Martinez, Ortega, Garcia & Garcia, 2009; Chen & Liao, 2009; Sun, Tseng & Chen, 2010). Related techniques are useful to improve the productivity of assembly lines (Park, Kim & Kim, 2006). Self-reconfiguration (Garcia & Villalobos, 2007a; Garcia & Villalobos, 2007b) and self-calibration (Carrasco & Mery, 2007; Treuillet, Albouy & Lucas, 2009) are also mentioned in some applications. Further information regarding early literatures can be found in the review by (Newman & Jain, 1995).

3.6. General Purpose Tasks

The automatic selection of good viewing parameters is a very complex problem. In most cases the notion of good viewing strongly depends on the concrete application, but some general solutions still exist in a limited extent (Chu & Chung, 2002; Zavidovique & Reynaud, 2007). Commonly, two kinds of viewing parameters must be set for active vision perception: camera parameters and lighting parameters (number of light sources, its position and eventually the orientation of the spot). The former determine how much of the geometry can be captured and the latter influence on how much of it is revealed (Vazquez, 2007).

Some multiview strategies are proposed for different application prospects (Al-Hmouz & Challa, 2005; Fiore, Somasundaram, Drenner & Papanikolopoulos, 2008). Mittal specifically addressed the state-of-the-art in the analysis of scenarios where there are dynamically occurring objects capable of occluding each other. The visibility constraints for such scenarios are analyzed in a multi-camera setting. Also analyzed are other static constraints such as image resolution and field-of-view, and algorithmic requirements such as stereo reconstruction, face detection and background appearance. Theoretical analysis with the proper integration of such visibility and static constraints leads to a generic framework for sensor planning, which can then be customized for a particular task. The analysis may be applied to a variety of applications, especially those involving randomly occurring objects, and include surveillance and industrial automation (Mittal, 2006).

In some robotic vision tasks, such as surveillance, inspection, image based rendering, environment modeling, require multiple sensor locations, or the displacement of a sensor in multiple positions for fully exploring an environment or an object. Edge covering is sufficient for tasks such as inspection or image based rendering. However, the problem is NP-hard, and no finite algorithm is known for its exact solution. A number of heuristics have been proposed, but their performances with respect to optimality are not guaranteed (Bottino et al., 2009). In 2D surveillance, the problem is modeled as an Art Gallery problem. A subclass of this general problem can be formulated in terms of planar regions that are typical of building floor plans. Given a floor plan to be observed, the problem is then to reliably compute a camera layout such that certain task-specific constraints are met. A solution to this problem is obtained via binary optimization over a discrete problem space (Erdem & Sclaroff, 2006). It can also be applied in security systems for industrial automation, traffic monitoring, and surveillance in public places, like museums, shopping malls, subway stations and parking lots, (Mittal & Davis, 2008; Mittal & Davis, 2004).

With visibility analysis and sensor planning in dynamic environments, in which the methods include computing occlusion-free viewpoints (Tarabanis et al., 1996) and feature detectability constraints (Tarabanis, Tsai & Allen, 1994), applications are widely existing in product inspection, assembly, and design in reverse engineering (Yegnanarayanan, Umamaheswari & Lakshmi, 2009; Tarabanis et al, 1995b; Scott, 2009).

In other aspects, an approach was proposed in (Marchand, 2007) to control camera position and/or lighting conditions in an environment using image gradient information. Auto-focusing technique is used by (Quang, Kim & Lee, 2008) in a projector-camera system. Smart cameras are applied by (Madhuri, Nagesh, Thirumalaikumar, Varghese & Varun, 2009). Camera network is designed with dynamic programming by (Lim, Davis & Mittal, 2007).

4. Methods and Solutions

The early work on sensor planning was mainly focused on the analysis of placement constraints, such as resolution, focus, field of view, visibility, and conditions for light source placement in a 2D space. A viewpoint has to be placed in an acceptable space and a number of constraints should be satisfied. The fundamentals in solving such a problem were established in the last decades.

Here the review scope is restricted to some common methods and solutions found in recently published contributions regarding view-pose determination and sensor parameter setting in robotics. It does not include: foveal sensing, hand-eye coordination, autonomous vehicle control, landmark identification, qualitative navigation, path following operation, etc., although these are also issues concerning the active perception problem. We give little consideration to contributions on experimental study (Treuillet et al, 2007), sensor simulation (Loniot et al, 2007; Wu et al, 2005), interactive modeling (Popescu, Sacks & Bahmutov, 2004), and semi-automatic modeling (Liu & Heidrich, 2003) either.

For the methods and solutions listed below, they might be used independently, or as hybrids, in the above-mentioned applications and tasks.

4.1. Formulation of Constraints

An intended view must first satisfy some constraints, either due to the sensor itself, the robot, or its environment. From the work by Cowan et al who made a highlight on the sensor placement problem, detailed descriptions of the acceptable viewpoints for satisfying many requirements (sensing constraints) have to be provided. Tarabanis et al. presented approaches to compute the viewpoints that satisfy many sensing constraints, i.e. resolution, focus, field-of-view, and detectability (Tarabanis et al, 1996; Tarabanis et al, 1995b; Tarabanis et al, 1994). Abrams et al also proposed to compute the viewpoints that satisfy constraints of resolution, focus (depth of field), field-of-view, and detectability (Abrams et al, 1999).

A complete list of constraints is summarized and analyzed by (Chen & Li, 2004). An admissible viewpoint should satisfy as many as nine placement constraints, including the geometrical (G1, G2, G6), optical (G3, G5, G8), reconstructive (G4, G6), and environmental (G9) constraints. These are listed in Table III. Fig. 10 intuitively illustrates several constraints (G1, G2, G3, G5, G7). Considering the 6 points (A - F) on the object surface, it can be seen in the figure that only point A satisfies all the five constraints, while all other points violated one or more of the constraints.

TABLE III SENSOR PLACEMENT CONSTRAINTS (Chen & Li, 2004)

|

Satisfaction |

Constraint |

|

G1 |

Visibility |

|

G2 |

Viewing angle |

|

G3 |

Field of view |

|

G4 |

Resolution constraint |

|

G5 |

In-focus or viewing distance |

|

G6 |

Overlap |

|

G7 |

Occlusion |

|

G8 |

Image contrast (affect (d, f, a) settings) |

|

G9 |

Kinematic reachability of sensor pose |

Fig. 10 Illustration of sensor placement constraints (Chen & Li, 2004)

The formulation of perception constraints is mostly used in model-based vision tasks (Trucco et al, 1997), such as inspection, assembly/disassembly, recognition, and object search (Tarabanis et al, 1995b), but the similar formulation is also valid in non-model based tasks (Chen et al, 2008a; Chen & Li, 2005). For the autonomous selection and modification of camera configurations during tasks, Chu and Chung consider both the camera's visibility and the manipulator's manipulability. The visibility constraint guarantees that the whole of a target object can be "viewed" with no occlusions by the surroundings, and the manipulability constraint guarantees avoidance of the singular position of the manipulator and rapid modification of the camera position. The optimal camera position is determined and the camera configuration is modified such that visual information for the target object can be obtained continuously during the execution of assigned tasks (Chu & Chung, 2002).

4.1.1. Cost Functions

Traditionally for sensor planning, a weighted function is often used for objective evaluation. It includes several components standing for placement constraints. For object model, the NBV was defined as the next sensor pose which would enable the greatest amount of previously unseen three-dimensional information to be acquired (Banta et al, 2000; Li & Liu, 2005). Tarabanis et al chose to formulate the probing strategy as a function minimization problem (Tarabanis et al, 1995b). The optimization function is given as a weighted sum of several component criteria, each of which characterizes the quality of the solution with respect to an associated requirement separately. The optimization function is written as:

![]() (6)

(6)

subject to gi³0, to satisfy four constraints, i.e. the resolution, focus, field-of-view, and visibility.

Equivalently with constraint-based space analysis, for each constraint, the sensor pose is limited to a possible region. Then the viewpoint space is given as the intersection of these regions and the optimization solution is determined by the above function in the viewpoint space, i.e.,

![]() (7)

(7)

In (Marchand & Chaumette, 1999a), the strategy of viewpoint selection took into account three factors: (1) the new observed area volume G(ft+1), (2) the cost function F in order to reduce the total camera displacement C(ft, ft+1), and (3) constraints to avoid unreachable viewpoints and to avoid positions near the robot joint limits B(f). The cost function Fnext to be minimized is defined as a weighted sum of the different measures:

![]() (8)

(8)

Ye and Tsotsos considered the total cost of object search via a function (Ye & Tsotsos, 1999):

![]() (9)

(9)

where the cost to(f) gives the total time needed to manipulate the hardware to the status specified by f , to take a picture, to update the environment and register the space, and to run the recognition algorithm. The effort allocation F = (f1, ..., fk ) gives the ordered set of operations applied in the search.

Chen and Li defined a criterion of lowest traveling cost according to the task execution time

![]() (10)

(10)

where T1 and T2 are constants reflecting the time for image digitalization, image preprocessing, 3D surface reconstruction, fusion and registration of partial models. n is the number of total viewpoints. k is the equivalent sensor moving speed. lc is the total path length of robot operations, which is computed from the sensor placement graph (Chen & Li, 2004).

4.1.2. Data-Driven

In active perception, data-driven sensor planning is to make sensing decisions according to local on-site data characteristics and to deal with environmental uncertainty (Miura & Ikeuchi, 1998; Miura & Ikeuchi, 1998; Whaite & Ferrie, 1997; Callari & Ferrie, 2001; Bodor et al, 2007).

In model-based object recognition, SyedaMahmood presents an approach that uses color as a cue to perform selection either based solely on image-data (data-driven), or based on the knowledge of the color description of the model (model-driven). The color regions extracted form the basis for performing data and model-driven selection. Data-driven selection is achieved by selecting salient color regions as judged by a color-saliency measure that emphasizes attributes that are also important in human color perception. The approach to model-driven selection, on the other hand, exploits the color and other regional information in the 3D model object to locate instances of the object in a given image. The approach presented tolerates some of the problems of occlusion, pose and illumination changes that make a model instance in an image appear different from its original description (SyedaMahmood, 1997).

Mitsunaga and Asada investigated how a mobile robot selected landmarks to make a decision based on an information criterion. They argue that observation strategies should not only be for self-localization but also for decision making. An observation strategy is proposed to enable a robot equipped with a limited viewing angle camera to make decisions without self-localization. A robot can make a decision based on a decision tree and on prediction trees of observations constructed from its experiences (Mitsunaga & Asada, 2006).

4.2. Expectation

Local surface features and expected model parameters are often used in active sensor planning for shape modeling (Flandin & Chaumette, 2001). A strategy developed by Jonnalagadda et al. is to select viewpoints in four steps: local surface feature extraction, shape classification, viewpoint selection and global reconstruction. When 2D and 3D surface features are extracted from the scene, they are assembled into simple geometric primitives. The primitives are then classified into shapes, which are used to hypothesize the global shape of the object and plan next viewpoints (Jonnalagadda et al, 2003).

In purposive shape reconstruction, the method adopted by Kutulakos and Dyer is based on a relation between the geometries of a surface in a scene and its occluding contour: If the viewing direction of the observer is along a principal direction for a surface point whose projection is on the contour, surface shape (i.e., curvature) at the surface point can be recovered from the contour. They use an observer that purposefully changes viewpoint in order to achieve a well-defined geometric relationship with respect to a 3D shape prior to its recognition. The strategy depends on only curvature measurements on the occluding contour (Kutulakos & Dyer, 1994).

Chen and Li developed a method by analyzing the target's trend surface, which is the regional feature of a surface for describing the global tendency of change. While previous approaches to trend analysis usually focused on generating polynomial equations for interpreting regression surfaces in three dimensions, they propose a new mathematical model for predicting the unknown area of the object surface. A uniform surface model is established by analyzing the surface curvatures. Furthermore, a criterion is defined to determine the exploration direction, and an algorithm is developed for determining the parameters of the next view (Chen & Li, 2005).

On the other hand, object recognition does also obviously need to analyze local surface features. The appearance of an object from various viewpoints is described in terms of visible 2D features, which are used for feature search and viewpoint decision (Kuno et al, 1991).

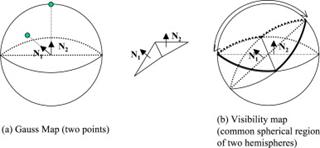

4.2.1. Visibility

A target of feature point must be visible and not occluded in a robotic vision system (Briggs & Donald, 2000; Chu & Chung, 2002). It is essential for real-time robot manipulation in cluttered environments (Zussman et al, 1994; Jang et al, 2008), or adaptation to dynamic scenes (Fiore et al, 2008). Recognition of time-varying geometrical objects or subjects needs to maximize the visibility in dynamic environment (Mackay & Benhabib, 2008b).